10. Web Applications

1. SSM Asset Identification

Take the results from the higher level Asset Identification in the 30,000’ View chapter of Fascicle 0. Remove any that are not applicable, add any relevant from previous chapters, add any newly discovered. Here are some to get you started:

- Ownership. Similarly as addressed in the VPS chapter, Do not assume that ownership, or at least control of your server(s) is something you will always have. Ownership is often one of the first assets an attacker will attempt to take from a target in order to execute further exploits. At first this may sound strange, but that is because of an assumption you may have that you will always own (have control of) your web application. Hopefully I dispelled this myth in the VPS chapter. If an attacker can take control of your web application (own it/steal it/what ever you want to call the act), then they have a foot hold to launch further attacks and gain other assets of greater value. The web application itself will often just be a stepping stone to other assets that you assume are safe. With this in mind, your web application is an asset. On the other hand you could think of it as a liability. Both may be correct. In any case, you need to protect your web application and in many cases take it to school and teach it how to protect itself. I cover that under the Insufficient Attack Protection section

- Similarly to the Asset Identification section in the VPS chapter, Visibility is an asset that is up for grabs

- Intellectual property or sensitive information within the code or configuration files such as email addresses and account credentials for the likes of data-stores, syslog servers, monitoring services. We address this in Management of Application Secrets

- Sensitive Client/Customer data.

- Sanity and peace of mind of people within your organisation. Those that are:

- Well informed

- Do not cut corners that should not be cut

- Passionate and driven to be their best

- Smart

- Humble yet fearless

- Recognise technical debt and are professional enough to speak up

- Engaged and love passing on their knowledge to others are truly an asset.

Some of the following risks will threaten the sanity of these people if the countermeasures are not employed.

2. SSM Identify Risks

Go through the same process as we did at the top level, in the 30,000’ View chapter of Fascicle 0, but for Web Applications. Also MS Application Threats and Countermeasures is useful.

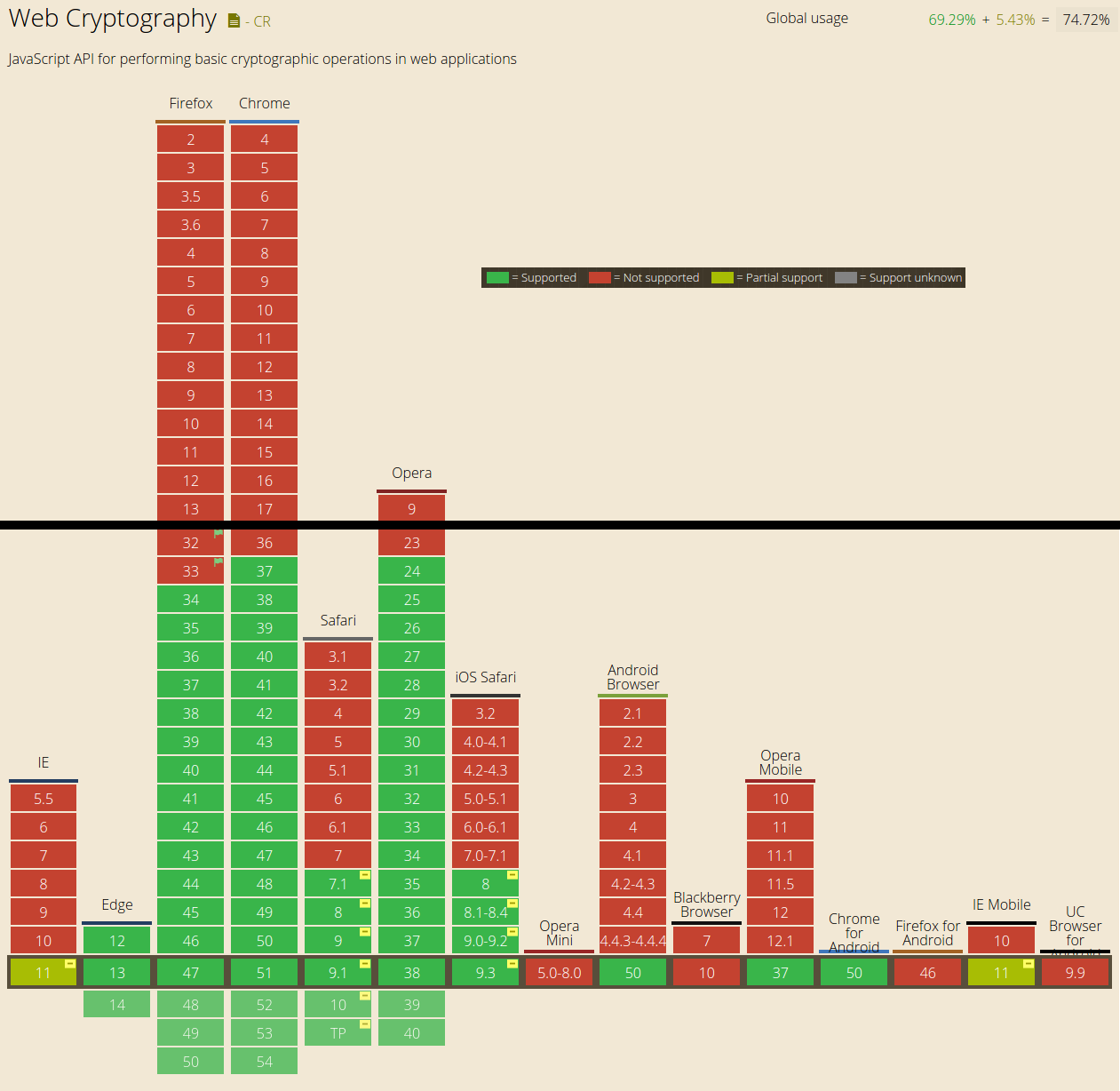

The following is the OWASP Top 10 vulnerabilities for 2003, 2004, 2007, 2010, 2013 and 2017.

As you can see there are some vulnerabilities that we are just not getting better at removing. I’ve listed them in order of most consistent to not quite as consistent:

- Injection (and the easiest to fix)

- Broken Authentication and Session Management

- XSS

- Insecure Direct Object References

- Missing Function Level Access Control

- We have gotten a little better at reducing CSRF with using the likes of the synchroniser token pattern, and possibly using LocalStorage more than cookies

“Using Components with Known Vulnerabilities” is one that I think we are going to see get worse before it gets better, due to the fact that we are consuming a far greater amount of free and open source. With the increase of free and open source package repositories, such as NPM, NuGet and various others, we are now consuming a far greater number of packages without vetting them before including them in our projects. This is why I’ve devoted a set of sections (“Consuming Free and Open Source”) within this chapter to this problem. There are also sections devoted to this topic in the VPS and Network chapters titled “Using Components with Known Vulnerabilities”. It’s not just a problem with software. We are trying to achieve more, with less of our own work, so in many cases we are blindly trusting other sources. From the attackers perspective, this is an excellent vector to compromise for maximum exploitation.

Lack of Visibility

I see this as an indirect risk to the asset of web application ownership. The same sections in the VPS and Network chapters may also be worth reading if you have not already, as there is some crossover.

Not being able to introspect your application at any given time or being able to know how the health status is, is not a comfortable place to be in and there is no reason you should be there.

Insufficient Logging and Monitoring

Can you tell at any point in time if someone or something is:

- Using your application in a way that it was not intended to be used

- Violating policy. For example circumventing client side input sanitisation.

How easy is it for you to notice:

- Poor performance and potential DoS?

- Abnormal application behaviour or unexpected logic threads

- Logic edge cases and blind spots that stake holders, Product Owners and Developers have missed?

- Any statistics that may be helpful in diagnosing any application specific issue

Lack of Input Validation, Filtering and Sanitisation

Generic

The risks here are around accepting untrusted data and parsing it, rendering it, executing it or storing it verbatim to have the same performed on it at a later stage.

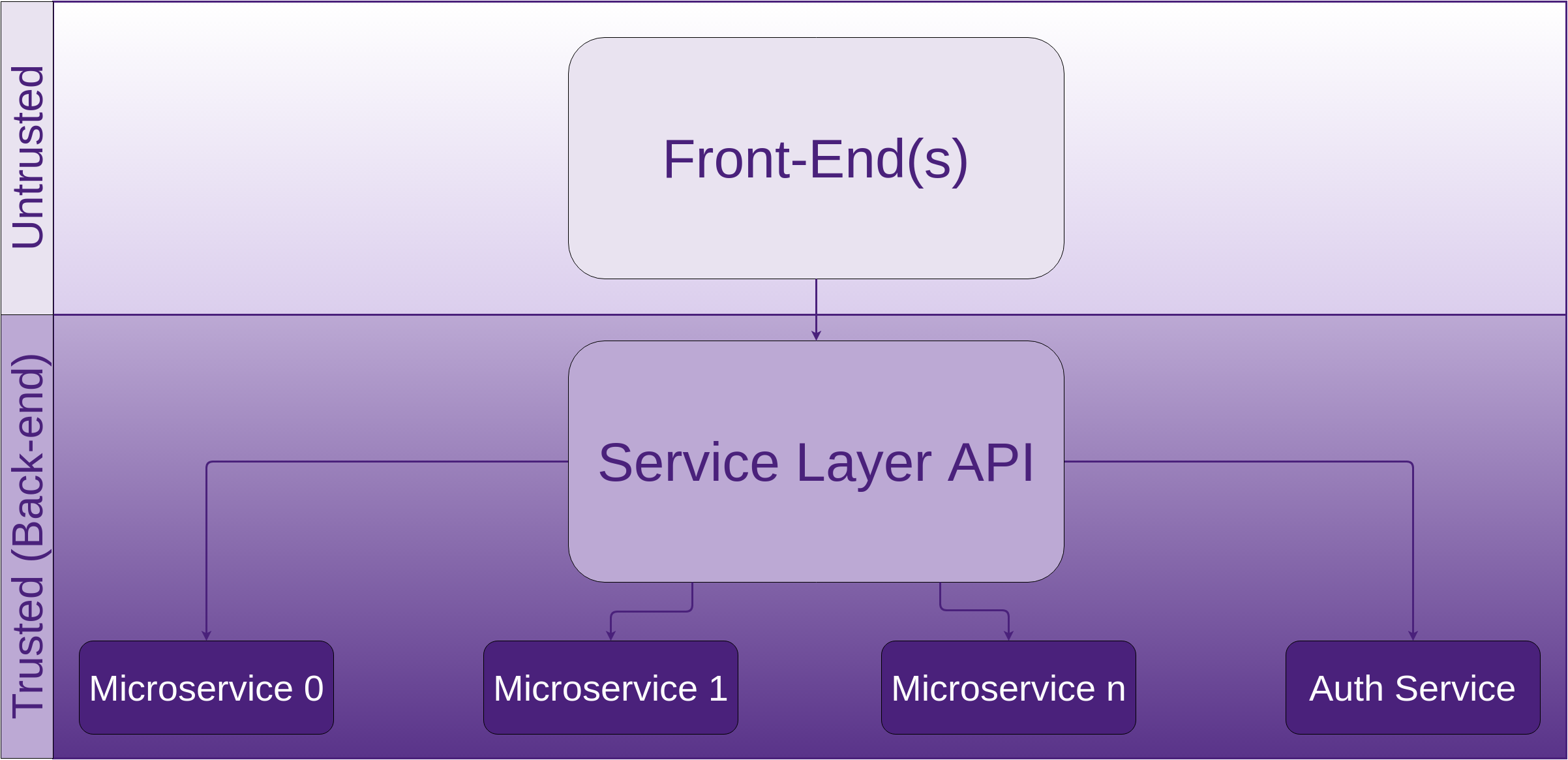

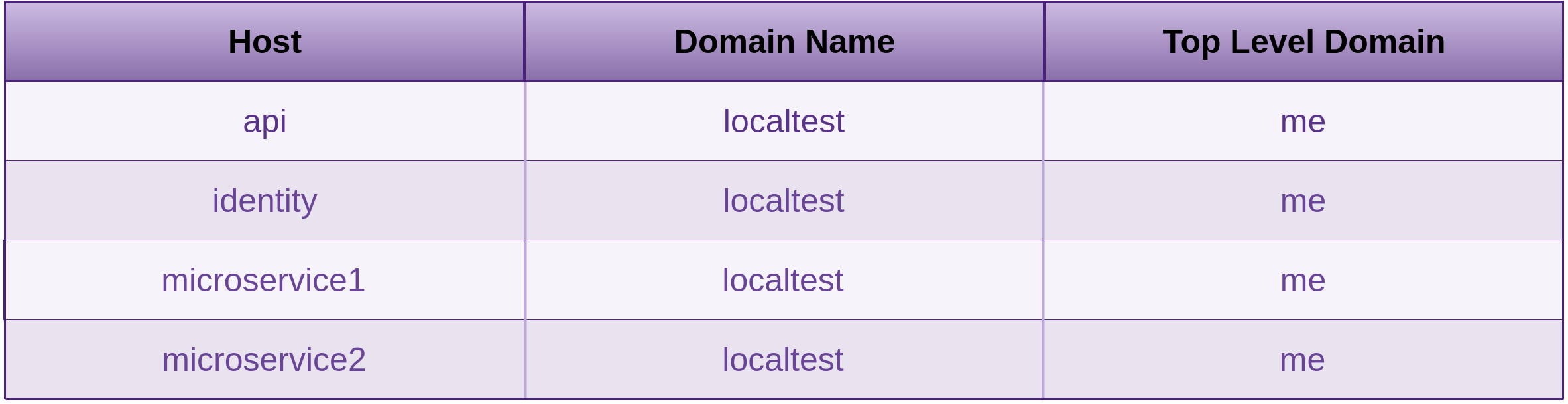

Untrusted territory is usually a location that is not close to your back-end executing code. If your back-end is in the cloud that you do not control, I.E. not your hardware, not your staff running it, then you have serious potential issues there as well that you may want to address. I’ve discussed in depth what these issues are in the previous chapters and how to mitigate the risks. Anywhere outside of your local network is untrusted. Inside your local network is semi-trusted. The amount of trust you afford depends on the relationships you have with your staff, how large your staff base is, how large your network is, how APs are managed and many of the other issues I have discussed in the previous chapters, especially Network, and Physical and People from Fascicle0. The closer data gets to the executing back-end code, the less untrustworthy the territory should be. Of course there are many exceptions to this rule as well.

So I could say, just do not trust anyone or anything, but there comes a time and a place that you have to afford trust. Just keep it as close to the back-end executing code as possible.

If you parse, render or execute data that you can not trust, that is data accepted by an unknown user, whether it be through a browser, intercepting communications somewhere along untrusted territory.

The web stack today is very complicated. We have URLs, CSS, JavaScript, XML and its derivatives, templating languages and frameworks. Most of these languages have their own encoding, quoting, commenting, escaping, and most of which can be nested inside of each other. Making the web browser a very treacherous place in terms of security. Browsers have made their living out of being insanely convoluted at interpreting all of this. All scripts have the same level of privilege within the browser.

This overly complex environment leads to confusion and a perfect haven for hiding malicious code chunks.

Below are a few techniques widely accepted that we need to use on any untrusted data before it makes its travels through your system to be stored or hydrated.

What is Validation

Decide what input is valid by way of a white list (list of input characters that are allowed to be received). Often each input field will have a different white list. Validation is binary, the data is either allowed to be received or not allowed. If it is not allowed, then it is rejected. This is usually not to complicated to work out what is good, what is not and thus rejected. There are a few strategies to use for white listing, such as the actual collection of characters or using regular expressions.

There are other criteria that you can validate against as well, such as:

- Field lengths

- Whether or not something is required

- Whether or not something is a specific type or in a specific format and many other criteria

// The NodeJS module express-form provides validation, filtering

// and some light weight sanitisation as Express middleware.

var form = require('express-form');

var fieldToValidate = form.field;

// trim is filtering.

fieldToValidate('name').trim().

// required is validation.

required().

// minLength is validation.

minLength(2).

// maxLength is validation.

maxLength(50).

// is is validation.

is(/^[a-zA-Z ']+$/)

// There is no sanitisation here.

What is Filtering

When some data can pass through (be received) and some is captured by the filter element (thou shalt not pass). OWASP has the RSnake donated seminal XSS cheat sheet which has many tests you can use to check your vulnerability stance to XSS exploitation. This is highly recommended.

What is Sanitisation

Sanitisation of input data is where the input data whether it is in your white list or not is accepted and transformed into a medium that is no longer dangerous. Now it will probably go through validation first. The reason you sanitise character signatures (may be more than single characters, character combinations) not in your white list is a defence in depth strategy. The white list may change in the future due to a change in business requirements and the developer may forget to revise the sanitisation routines. Always think of any security measure as standing on its own when you create it, but standing alongside many other security measures once done.

You need to know which contexts your input data will pass through in order to sanitise correctly for all potential execution contexts. This requires lateral thinking and following all execution paths. Both into and out of your application (once rehydrated), being pushed back to the client. We cover this in depth below in the “Example in JavaScript and C#” section in the countermeasures.

Cross-Site Scripting (XSS)

The following hands on hack demonstrates what a XSS attack is and provides a little insight into some of the damages that it can cause.

A XSS attack is one in which untrusted data enters a web application usually through a web request and is not stopped by validation, filtering or sanitisation. The data is then at some point sent to someone using the same web application without being validated, filtered or sanitised.

The data in question is executed by the browser, usually JavaScript, HTML or Flash. What the code does is up to the creativity of the initiator.

One way this can be carried out trivially is to simply buy an add and have that injected into the end users browser. The malicious code can be easily hidden by simply changing additional scripts that are fetched once live. Even fetching a script that fetches a different script under the attackers control.

The main two different types of XSS are Stored/Persistent or Type I and Reflected/Non-Persistent or Type II.

Stored attacks are where the injected code is sent to the server and stored in a medium that the web application uses to retrieve it again to send to another user.

Reflected attacks use the web application in question as a proxy. When a user makes a request, the injected code travels from another medium through the web application (hence the reflecting) and to the end user. From the browsers perspective, the injected code came form the web application that the user made a request to.

The following attack was the first one of five that I demonstrated at WDCNZ in 2015. The attack after this one was a credential harvest based on a spoofed website that hypothetically was fetched due to a spear phishing attack. That particular attack can be found in the “Spear Phishing” section of the People chapter in Fascicle 0.

Theoretically in order to get to the point where you carry out this attack, you would have already been through several stages first. If you are carrying out a penetration testing engagement, it is likely you would have been through the following:

- Information gathering (probably partly even before you signed the contract with your client)

- Vulnerability scanning

- Vulnerability searching

If you are working within a development team you may have found out some other way that your project was vulnerable to XSS.

How ever you got to this point, you are going to want to exhibit the fault. One of the most effective ways to do this is by using BeEF. BeEF clearly shows what is possible when you have an XSS vulnerability in scope and is an excellent tool for effortlessly demonstrating the severity of the fault to all team members and stakeholders.

One of BeEFs primary reasons to exist is to exploit the fact that many security philosophies seem to forget how easy it is to go straight through hardened network perimeters and attack the soft mushy insides of a sub network as discussed in the Fortress Mentality section of the Physical chapter in Fascicle 0. Exposing XSS faults is one of BeEFs attributes.

You can find the video of how this attack is played out at http://youtu.be/92AWyUfJDUw.

Cross-Site Request Forgery (CSRF)

This type of attack depends on a fraudulent web resource, be it a website, email, instant message, or program that causes the targets web browser to perform an unintentional action on a website that the target is currently authenticated with.

CSRF attacks target functionality that change state on the server (POST, PUT, DELETE) that the target is currently authenticated with, requests such as changing the targets credentials, making a purchase, moving money at their bank. Forcing the target to retrieve data does not help the attacker.

If you are using cookies (authentication that the browser automatically sends from the client) for storage of client-side session artefacts, CSRF is your main concern, but XSS is also a possible attack vector.

The target needs to submit the request either intentionally or unintentionally. The request could appear to the target as any action. For example:

- Intentionally by clicking a button on a website that does not appear to have anything in common with the website that the target is already authenticated with by way of session Id stored in a cookie

- Unintentionally by loading a page not appearing to have anything in common with the website that the target is already authenticated with by way of session Id stored in a cookie, that for example contains an

<iframe>with a form and a script block inside

For No. 1 above, the action attribute of the form for which the target submits could be to the website that the target is already authenticated with, thus a fraudulent request is issued from a web page seemingly unrelated to the website that the target is already authenticated with. This can be seen in the second example below on line 4. The browser plays its part and sends the session Id stored in the cookie. NodeGoat has an excellent example of how this plays out:

Below code can be found at https://github.com/OWASP/NodeGoat/:

1 <form role="form" method="post" action="/profile">

2 <div class="form-group">

3 <label for="firstName">First Name</label>

4 <input

5 type="text"

6 id="firstName"

7 name="firstName"

8 value="{{firstName}}"

9 placeholder="Enter first name">

10 </div>

11 <div class="form-group">

12 <label for="lastName">Last Name</label>

13 <input

14 type="text"

15 id="lastName"

16 name="lastName"

17 value="{{lastName}}"

18 placeholder="Enter last name">

19 </div>

20 <div class="form-group">

21 <label for="ssn">SSN</label>

22 <input

23 type="text"

24 id="ssn"

25 name="ssn"

26 value="{{ssn}}"

27 placeholder="Enter SSN">

28 </div>

29 <div class="form-group">

30 <label for="dob">Date of Birth</label>

31 <input

32 type="date"

33 id="dob"

34 name="dob"

35 value="{{dob}}"

36 placeholder="Enter date of birth">

37 </div>

38 <div class="form-group">

39 <label for="bankAcc">Bank Account #</label>

40 <input

41 type="text"

42 id="bankAcc"

43 name="bankAcc"

44 value="{{bankAcc}}"

45 placeholder="Enter bank account number">

46 </div>

47 <div class="form-group">

48 <label for="bankRouting">Bank Routing #</label>

49 <input

50 type="text"

51 id="bankRouting"

52 name="bankRouting"

53 value="{{bankRouting}}"

54 placeholder="Enter bank routing number">

55 <p class="help-block">

56 Must be entered as digits with a suffix of #. For example: 0198212# </p>

57 </div>

58 <div class="form-group">

59 <label for="address">Address</label>

60 <input

61 type="text"

62 id="address"

63 name="address"

64 value="{{address}}"

65 placeholder="Enter address">

66 </div>

67 <input type="hidden" name="_csrf" value="{{csrftoken}}" />

68 <button type="submit" class="btn btn-default" name="submit">Submit</button>

69 </form>

The below attack code can be found at the NodeGoat tutorial for CSRF, along with the complete example that ckarande crafted, and an accompanying tutorial video of how the attack plays out:

1 <html lang="en">

2 <head></head>

3 <body>

4 <form method="POST" action="http://TARGET_APP_URL_HERE/profile">

5 <h1> You are about to win a brand new iPhone!</h1>

6 <h2> Click on the win button to claim it...</h2>

7 <!--User is authenticated, let's force them to change some values-->

8 <input type="hidden" name="bankAcc" value="9999999"/>

9 <input type="hidden" name="bankRouting" value="88888888"/>

10 <input type="submit" value="Win !!!"/>

11 </form>

12 </body>

13 </html>

For No. 2 above, is similar to No. 1, but the target does not have to action anything once the fraudulent web page is loaded. The script block submits the form automatically, thus making the request to the website that the target is already authenticated with, the browser again playing its part in sending the session Id stored in the cookie:

<form id="theForm" action="http://TARGET_APP_URL_HERE/profile" method="post">

<input type="hidden" name="bankAcc" value="9999999"/>

<input type="hidden" name="bankRouting" value="88888888"/>

<input type="submit" value="Win !!!"/>

</form>

<script type="text/javascript">

document.getElementById('theForm').submit();

</script>

Some misnomers:

- A form is not essential to carry out CSRF successfully

- XSS is not essential to carry out CSRF successfully

- HTTPS does nothing to defend against CSRF

Injection

injection occurs when untrusted data is sent to an interpreter as part of a command or query. The attacker’s hostile data can cause the interpreter to execute commands that would otherwise not be, or accessing data without proper authorization.

In order for injection attacks to be successful, untrusted data must be unsuccessfully validated, filtered and sanitised before it reaches the interpreter.

Injection defects are often easy to discover simply by examining the code that deals with untrusted data, including internal data. These same defects are often harder to discover when black-box testing, either manually or via fuzzers. Defects can range from trivial to complete system compromise.

As part of the discovery stage, the attacker can test their queries and start to build up what they think the underlying structure looks like that they are attacking with any number of useful on-line query test tools, such as freeformatter.com. This allows the attacker to make as little noise to signal ratio as possible.

SQLi

One of the simplest and quickest vulnerabilities to fix is SQL Injection, yet it is still top of the hit lists. I am going to hammer this home some more. Also checkout the podcast I hosted with Haroon Meer as guest on Network Security for Software Engineering Radio. Haroon discussed using SQLi for data exfiltration.

There are two main problems here.

- SQL Injection

- Poor decisions around sensitive data protection. We discuss this in depth further on in this chapter in the Data-store Compromise section and even more so the Countermeasures of. Do not follow this example of a lack of well salted and quality strong key derivation functions (KDFs) used on all of the sensitive data in your own projects.

NoSQLi

NoSQL Injection is semantically very similar to SQL Injection and Command Injection with JavaScript running on the server. The main differences are in the syntax of the attack.

NoSQL data stores often provide greater performance and scaling benefits, but in terms of security, are disadvantaged due to far fewer relational and consistency checks.

There are currently over 225 types of NoSQL data stores, and each one does things differently. This of course means that if all we considered was the number of systems compared to SQL, then the likelihood of confusion around what a safe query looks like in any given NoSQL engine has increased dramatically. There is also more room for an attacker to move within NoSQL queries than with SQL due to being heavily dependent on JavaScript which is a dynamic loosely typed general purpose language rather than the far more tightly constrained declarative SQL.

Because there are so many types of NoSQL data store, crafting the attacks is often implementation specific, and because of that, the countermeasures are also implementation specific, making it even more work to provide a somewhat secure environment for your untrusted data to pass through. It often feels like there is no safe passage.

The data store queries are usually written in the programming language of the application, or buried within an API of the same language, often the ubiquitous JavaScript is used, which can be executed in the Web UI, the server side code if it is JavaScirpt, and then the particular type of NoSQL data store, so there are many more execution contexts that can be attacked.

I can not possibly cover all of the types of NoSQL data store, so the below is an example of some mongodb.

A typical SQL query used to select a user based on their username and password might look like the following:

SELECT * FROM accounts WHERE username = '$username' AND password = '$password'

If the $username and $password fields were not properly validated, filtered and sanitised, an attacker could supply

admin' --

as the username and the resulting query would look like:

-- As you can see, the password is no longer required,

-- as the rest of the query is commented out.

SELECT * FROM accounts WHERE username = 'admin' --' AND password = ''

The equivalent of a MongoDB query could be:

db.accounts.find({username: username, password: password});

One way an attacker could attempt to bypass the password if the untrusted data was not being validated would be to supply a username of:

admin

and a password of:

{$gt: ""}

The resulting query would then look like:

{username: "admin", password: {$gt: ""}}The MongoDB $gt comparison operator is used here to select those documents where the value of the password is greater than "", which always results in true because empty passwords are not permitted. Check the NodeGoat tutorial for additional information.

Command Injection

The following examples target JavaScript running on the server. If your application on the server is written in some other language, then the attacker just needs to supply code of that language to be executed.

The following functions are easily exploited by an attacker by simply supplying the code they want to execute as a string of text input:

The JavaScript eval function executes the string of code it is passed with the privileges of the caller.

The JavaScript setTimeout and setInterval functions allow you to pass a string of code as the first argument, which is compiled and executed on timer expiration (with setTimeout), and at intervals (with setInterval).

The JavaScript Function constructor takes an arbitrary number of string arguments which are used as the formal parameter names within the function body that the constructor creates. The last argument passed to the Function constructor is also a string, but it contains the statements that are to be executed as the Function object that is returned.

The purposely vulnerable Node Web Application NodeGoat, provides some simple examples in the form of executable code including some absolute bare minimum countermeasures, a tutorial with videos of exploiting Command Injection in the form of a Denial of Service (DoS), by passing

while(1)

or

process.exit()

or

process.kill(process.pid)

through some input fields of the Web UI. It also covers discovery of the names of the files on the target web servers file system:

// Read the contents of the current directory.

res.end(require('fs').readdirSync('.').toString());

// Read the contents of the parent directory.

res.end(require('fs').readdirSync('..').toString());

res.end(require('fs').readFileSync(filename));

The attacker can take this further by writing new files and executing files on the server.

Many web applications take untrusted data, often from HTTP requests and pass this data directly to the Operating System, its programs, or even other systems, often by APIs, which is now addressed by the OWASP Top 10 A10 Under protected APIs

Also check out the Additional Resources chapter for command injection attack techniques.

XML Injection

XML injection techniques usually consist of a discovery stage followed by full exploitation if successful:

- Attempting to create invalid XML document by injecting various XML metacharacters (metacharacter injection):

- Single quotes:

' - Double quotes:

" - Angle brackets:

>< - Comment tags:

<!--and--> - Ampersand:

& - CDATA section delimiters:

<![CDATA[and]]>allowing an attacker to form executable code

- Single quotes:

- Injecting an untrusted XML document which is accepted and not contextually validated, filtered or sanitised by the parser. That is if XML documents are accepted. Exploiting the XML parser that is configured to resolve, validate and process external entities in the form of Document Type Definitions (DTDs) and XML Schema Definitions (XSDs). This is known as XML External Entity (XXE) exploitation

- Attempting to modify the XML structure by injecting various XML data and Tags (tag injections)

and witnessing how the parser deals with the data.

There are a number of XML attack categories exploitable via injection that target weaknesses such as the following that Adam Bell presented at the OWASP New Zealand Day conference in 2017 that I helped to run. Adams talk was called: “XML Still Considered Dangerous:

- Parameter expansion

- XML External Entities (XXE)

- URL Invocation

Check out Adams slide-deck at the OWASP NZ Day wiki for some interesting examples.

XSLT Injection

There is a lot that can go wrong in XSLT, the following is a collection of some of the vulnerabilities to be aware of:

- Leveraging XPath to refer to parts of XML documents

- Susceptible to XXE of local and remote files, as well as include additional arbitrary XSL files

- Extract system information including file contents

- Port scanning

- Write files to file system

- Access of arbitrary databases

- Execution of arbitrary code

XPath Injection

Similarly to generic injection, when XPath incorporates untrusted data that has not been validated, filtered and sanitised based on the execution context in question, this is discussed in the “What is Sanitisation” section of the Lack of Input Validation, Filtering and Sanitisation section of Identify Risks, the intended logic of the query can be modified. This allows an attacker to inject purposely malformed data into a website, then by making educated guesses based on what is returned, including the returned data and any error messages, the attacker can build a picture of how the XML structure looks. Similar to XML Injection, the attacker will usually carry out this discovery stage followed by full exploitation if successful.

Although this attack technique is similar to SQLi, XPath has no provision for commenting out tails of expressions:

- MS SQL, MySQL and Oracle:

-- - MySQL:

# - MS Access:

%00

XPath is a standards based language, unlike SQL, its syntax is implementation independent, this allows attacks to be automated across many targets that use user input to query XML documents.

Unlike SQL where specific commands and queries are run as specific users, and thus the principle of least privilege can be applied to any given command or query based on the user running it, with XPath there is no notion of Access Control Lists (ACLs), this means that a query can access every part of the XML document. So for example, if user credentials are being stored in an XML document, they can be retrieved and allow the attacker to elevate their privileges to those of other users, perhaps administrators if the administrators credentials are stored in the XML document.

Let us use the following XML document for examples:

<?xml version="1.0" encoding="UTF-8"?>

<Users>

<User ID="1">

<FirstName>John</FirstName>

<LastName>Deer</LastName>

<UserName>jdeer</UserName>

<Password>3xp10it3d</Password>

<Type>administrator</Type>

</User>

<User ID="2">

<FirstName>Kim</FirstName>

<LastName>Carter</LastName>

<UserName>kimc</UserName>

<Password>t03457</Password>

<Type>user</Type>

</User>

</Users>

Blind injection is a technique used in many types of injection. A blind injection attack is used where the attacker does not know what the underlying query, or in the case of XPath, XML document looks like, and/or the feedback from the application reveals little detail in terms of data or error messages. Often booleanised queries and/or XML Crawling are used to facilitate blind injection attacks.

Booleanised Queries are those that return very granular true/false information based on very small amounts of data supplied by the attacker.

The following XPath query returns the first character of the first child node (no mater what it is called) of the first User:

substring((//User[1]/*[1]),1,1)

The following XPath query returns the length of the forth element (no mater what it is called) of the first User:

string-length(//User[1]/*[4])

XML Crawling allows the attacker to get to know what the XML document structure looks like.

For example, the following reveals that the number of Users is 2:

count(//Users/*)

The following reveals that the length of the value at the fourth position of the child node (no mater what it is called) of the first User is in fact 9 characters long. Is the Password nine characters long? True.

string-length(//User[position()=1]/*[position()=4])=9

The following query can be used to confirm that the first character of the fourth child node (no mater what it is called) of the first User is in fact 3:

substring((//User[1]/*[4]),1,1)="3"

Checkout the OWASP XML Crawling documentation for further details. The queries at the OWASP documentation did not work for me, hence why I have supplied ones that do.

Using booleanised queries can be very good for automated attacks, usually many of these granular tests will need to be performed, but because they are so small, an attacker can aggregate them, making them very versatile.

Continuing on: If our target application contains logic to retrieve the account type so long as the user provides their username and password, that logic may look similar to the following, once the legitimate administrator has entered their credentials:

string(//User[UserName/text()='jdeer' and Password/text()='3xp10it3d']/Type/text())

If the application does not take the countermeasures discussed, and the attacker enters the following:

Username:

' or '1'='1

Password:

' or '1'='1

Then the above query will look more like the following:

string(//User[UserName/text()='' or '1'='1' and Password/text()='' or '1'='1']/Type/text())

Which although the attacker has not supplied a valid UserName or Password, according to the XPath query, they are still authenticated, because the query will always evaluate to true. This is called authentication bypass for obvious reasons. You may notice that this attack looks very similar to many SQLi attacks.

The available XPath functions and XSLT specific additions to XPath are documented at Mozilla Developer Network (MDN)

Technically, XPath is used to select parts of an XML document, it has no provision for updating, in saying that, command injection can be used to modify data that XPath returns. Standard language libraries usually provide functionality for modifying XML documents, along with the XML Data Modification Language (DML) that we will discuss soon in the next section.

XQuery Injection

XQuery being a superset of XPath, suffers from the same vulnerabilities as XPath, plus the SQL-like FOR, LET, WHERE, ORDER BY, RETURN (FLWOR) expression abilities. Hopefully coming as no surprise, the attack vectors are a combination of those discussed in XPath Injection and SQLi. The canonical example is provided below thanks to projects.webappsec.org:

<?xml version="1.0" encoding="UTF-8"?>

<userlist>

<user category="group1">

<uname>jdeer</uname>

<fname>John</fname>

<lname>Deer</lname>

<status>active</status>

</user>

<user category="admins">

<uname>kimc</uname>

<fname>Kim</fname>

<lname>Carter</lname>

<status>active</status>

</user>

<user category="group2">

<uname>bobm</uname>

<fname>Bob</fname>

<lname>Marley</lname>

<status>deceased</status>

</user>

<user category="group1">

<uname>MSmith</uname>

<fname>Matthew</fname>

<lname>Smith</lname>

<status>terminated</status>

</user>

</userlist>

If the query to retrieve the user bobm was using a string literal from the users input (bobm), it may look similar to the following:

doc("users.xml")/userlist/user[uname="bobm"]

An attacker could exploit the query by providing:

something" or ""="

which would form the following query:

doc("users.xml")/userlist/user[uname="something" or ""=""]

which upon execution would yield all of the users.

Something else to keep in mind is that XQuery also has an extension called the XML Data Modification Language (DML), which is commonly used to update (insert, delete and replace value of) XML documents.

LDAP Injection

The same generic injection information is applicable to LDAP

Successful LDAP injection attacks could result in the granting of permissions to unauthorised queries and/or adding, deleting or modifying of entries in the LDAP tree. Because LDAP is used extensively for user authentication, this is a particularly viable area for attackers.

As we discussed the usage of metacharacters in XML Injection, LDAP search filter metacharacters can be injected into the dynamic parts of the queries and thus executed by the application.

LDAP uses Polish notation (PN), or normal Polish notation (NPN), or simply prefix notation, which has the distinguishing feature of placing operators to the left of their operands

One of the canonical examples, is a web application that takes the users credentials and verifies their authenticity, if successful, the user is authenticated and granted access to specific resources.

The LDAP filter used to check whether the supplied username and password of a user is correct, might look similar to the following:

With user input:

and:

the search filter would look similar to the following:

The (&) we used that did not contain any embedded filters is called the LDAP true filter, and will always match any target entry. This allows the attacker to compare a valid uid with true, the attacker can subsequently use any password as only the first filter is processed by the LDAP server.

Captcha

Lack of captchas are a risk, but so are captchas themselves…

What is the problem here? What are we trying to stop?

Bots submitting. What ever it is, whether advertising, creating an unfair advantage over real humans, link creation in attempt to increase SEO, malicious code insertion, you are more than likely not interested in accepting it.

What do we not want to block?

People submitting genuinely innocent input. If a person is prepared to fill out a form manually, even if it is spam, then a person can view the submission and very quickly delete the validated, filtered and possibly sanitised message.

Management of Application Secrets

Also consider reviewing the Storage of Secrets subsections in the Cloud chapter.

- Passwords and other secrets for things like data-stores, syslog servers, monitoring services, email accounts and so on can be useful to an attacker to compromise data-stores, obtain further secrets from email accounts, file servers, system logs, services being monitored, etc, and may even provide credentials to continue moving through the network compromising other machines.

- Passwords and/or their hashes travelling over the network.

Data-store Compromise

The reason I have tagged this as moderate is because if you take the countermeasures, it doesn’t have to be a disaster.

The New Zealand Intelligence Service recently told Prime Minister John Key that this was one of the 6 top threats facing New Zealand. “Cyber attack or loss of information and data, which poses financial and reputational risks.”

There are many examples of data-store compromise happening on a daily basis. If organisations took the advice I outline in the countermeasures section the millions of users would not have their identifies stolen. Sadly the advice is rarely followed. The Ashley Madison debacle is a good example. Ashley Madisons entire business relied on its commitment to keep its clients (37 million of them) data secret, provide discretion and anonymity.

“Before the breach, the company boasted about airtight data security but ironically, still proudly displays a graphic with the phrase “trusted security award” on its homepage”

“We worked hard to make a fully undetectable attack, then got in and found nothing to bypass…. Nobody was watching. No security. Only thing was segmented network. You could use Pass1234 from the internet to VPN to root on all servers.”

“Any CEO who isn’t vigilantly protecting his or her company’s assets with systems designed to track user behavior and identify malicious activity is acting negligently and putting the entire organization at risk. And as we’ve seen in the case of Ashley Madison, leadership all the way up to the CEO may very well be forced out when security isn’t prioritized as a core tenet of an organization.”

Dark Reading

Other notable data-store compromises were LinkedIn with 6.5 million user accounts compromised and 95% of the users passwords cracked in days. Why so fast? Because they used simple hashing, specifically SHA-1. EBay with 145 million active buyers. Many others coming to light regularly.

Are you using well salted and quality strong key derivation functions (KDFs) for all of your sensitive data? Are you making sure you are notifying your customers about using high quality passwords? Are you informing them what a high quality password is? Consider checking new user credentials against a list of the most frequently used and insecure passwords collected. I discussed Password Lists in the Tooling Setup chapter. You could also use wordlists targeting the most commonly used passwords, or create an algorithm that works out what an easy to guess password looks like, and inform the user that it would be easy to guess by an attacker.

Cracking

Remember we covered Password Profiling in the People chapter where we essentially made good guesses, both manually and with the use of profiling tools, around the end users passwords, and then feed the short-list to a brute forcing tool. Here we already have the password hashes. We just need to find the source passwords that created the hashes. This is where cracking comes in.

When an attacker acquires a data-store or domain controller dump of hashed passwords, they need to crack the hashes in order to get the passwords. How this works, is the attacker will find or create a suitable password list of possible passwords. The tool used will attempt to create a hash of each of these words based on the hashing algorithm used on the dump of hashes. Then compare each dumped hash with the hashes just created. When a match is found, we know that the word in our wordlist used to create the hash that matches the dumped hash is in fact a legitimate password.

A smaller wordlist is going to take less time to create the hashes. As this is often an off-line attack, a larger wordlist is often preferred over a smaller one because the number of generated hashes will be greater, which when compared to the dump of hashes means the likelihood of a greater number of matches is increased.

As part of the hands on hack in the SQLi section, we obtained the password hashes via SQL injection from the target web application DVWA (part of the OWASP Broken Web Application suite (VM)). We witnessed how an attacker could obtain the passwords from the hashed values retrieved from the database.

Lack of Authentication, Authorisation and Session Management

Also brought to light by the OWASP Top 10 risks “No. 2 Broken Authentication and Session Management”.

With this category of attacks, your attacker could be either someone you do or do not know. Possibly someone already with an account, an insider maybe, looking to take the next step which could be privilege escalation or even just alteration so that they have access to different resources by way of acquiring other accounts. Some possible attack vectors could be:

- Password acquisition: by way of data-store theft (off-line attack) or poor password hashing strategies (susceptible to off-line and on-line attacks), discussed in the Countermeasures section but in more depth in the Management of Application Secrets sections

- Passwords or sessionIds travelling over unsecured channels susceptible to Man In the Middle (MItM) attacks, discussed in the Countermeasures section but also refer to the TLS Downgrade sections of the Network chapter

- Buggy Session Management, SessionIds exposed in URLs

- Faulty logout (not invalidating authentication tokens)

- Faulty remember me functionality

- Long session time-outs can exacerbate other weak areas of defence

- Secret questions

- Updating account details

In the Countermeasures section I go through some mature and well tested libraries and other technologies, and details around making them fit into a specific business architecture.

Consider what data could be exposed from any of the accounts and how this could be used to gain a foot hold to launch further alternative attacks. Each step allowing the attacker to move closer to their ultimate target, the ultimate target being something hopefully discussed during the Asset Identification phase or taking another iteration of it as you learn and think of additional possible targeted assets.

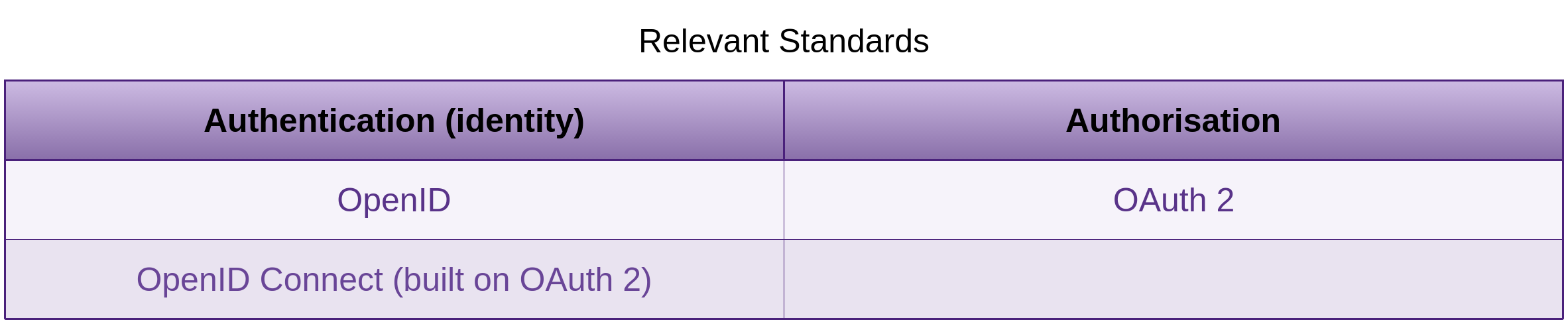

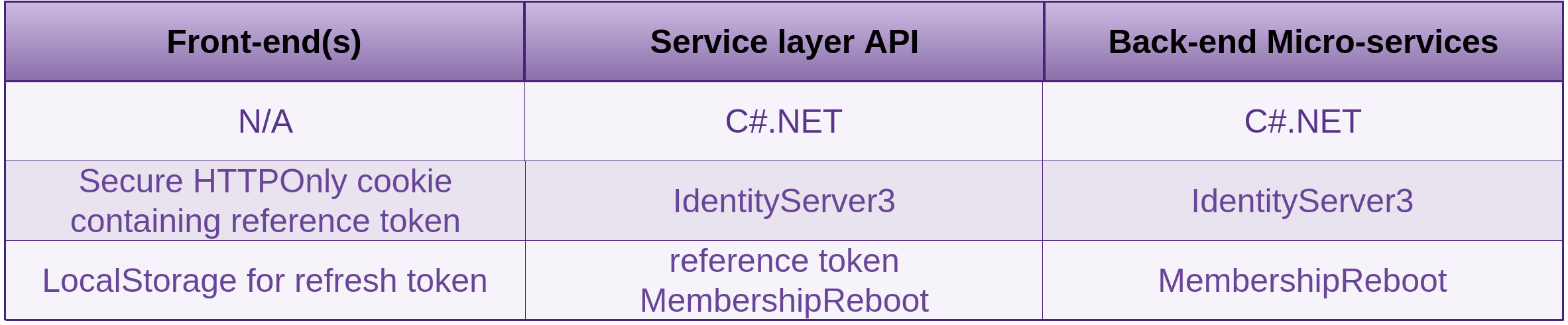

Often the line between the following two concepts gets blurred, sometimes because where one starts and one ends is often not absolute or clear, and sometimes intentionally. Neither help new comers and even those used to working with the concepts get to grips with which is which and what the responsibilities of each include.

What is Authentication

The process of determining whether an entity (be it person or something else) is who or what it claims to be.

Being authenticated, means the entity is known to be who or what it/he/she claims to be.

What is Authorisation

The process of verifying that an entity (usually requesting)(be it person or something else) has the right to a resource or to carry out an action, then granting permission requested.

Being authorised, means the entity has the power or right to certain privileges.

Don’t build your own authentication, authorisation or session management system unless it’s your core business. It’s too easy to get things wrong and you only need one defect in order to be compromised. Leave it to those that have already done it or do it as part of their core business and have already worked through the defects.

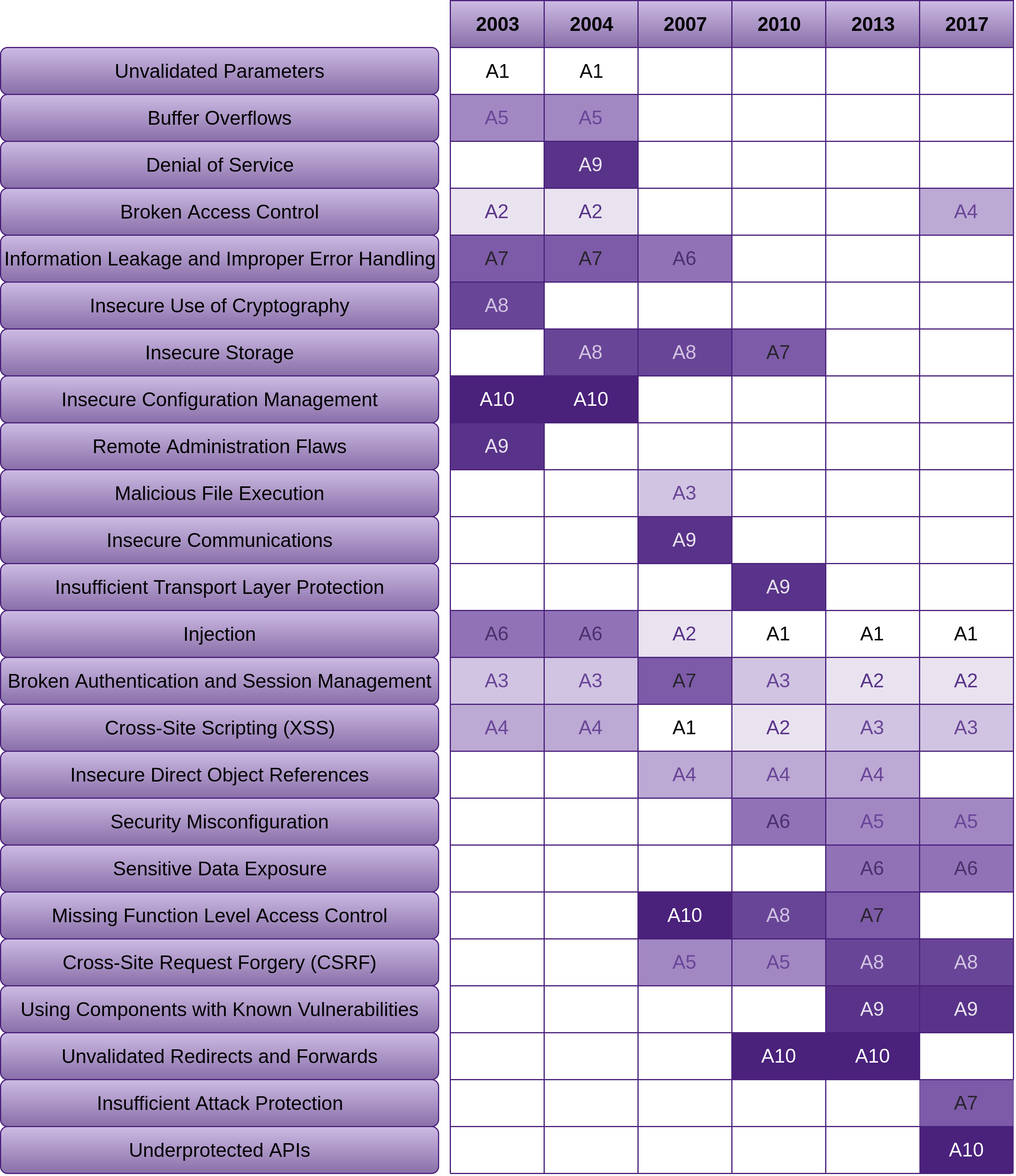

Cryptography on the Client (AKA Untrusted Crypto)

The things I see that seem to get many developers into trouble:

- Lack of understanding of what the tool is, where and how it should be used

- Use of low-level primitives with no to little knowledge of which are most suitable for which purposes. How to make them work together. How to use and configure them, so as to not introduce security defects, usually due to not understanding how the given primitive is designed and its purpose of use

- Many libraries have either:

- To many options which just helps to create confusion for developers as to what to use for which purpose. The options they do have are not the best for their intended purpose

- The creators may be developers, but are not cryptographers

There are so many use cases with the wider cryptography topic. There is no substitute for learning about your options, which to use in any given situation and how to use them.

JavaScript crypto is wrought with problems.

For example, there is nothing safe about having JavaScript in the browser store, read or manage an entropy pool. This gets a little better with the Web Cryptography APIs (discussed in the Countermeasures section) window.crypto.getRandomValues().

Little trust should be placed in the browser and how it generally fails to manage attacks due to the complexities discussed in both Risks and Countermeasures sections of “Lack of Input Validation, Filtering and Sanitisation”. The browser has enough trouble getting all the technologies to work together and inside of each other, let-a-lone stopping malicious code fragments that do work from working. As most web developers have worked out, the browser is purposely designed to make even syntactically incorrect code work correctly.

The browser was designed to download and run potentially malicious, untrusted code from arbitrary locations on the fly. The browser in most cases doesn’t know what malicious code is, and often the first payload is not malicious, but simply fetches other code that may fetch other code that eventually will be malicious.

The JavaScript engines are constantly changing, meaning that the developers expectations of relying on implementation details of the execution environments to stay somewhat stable, will be constantly let down.

Web development is hard. Web security is harder still.

The following is a list of the best JavaScript crypto libraries we have available to us. I purposely left many out, as I don’t want to muddy the waters and add more lower quality options:

-

Stanford JavaScript Crypto Library (SJCL)

- Home page: http://bitwiseshiftleft.github.io/sjcl/ The default key stretching factor appears to be 1000. This should probably be adaptive to match the advances in hardware technology, as discussed under the MembershipReboot section.

- SJCL demo page: http://bitwiseshiftleft.github.io/sjcl/demo/ which helps to explain some of the creators reasoning.

- Source Code: https://github.com/bitwiseshiftleft/sjcl/

- NPM package: https://www.npmjs.com/package/sjcl. Yes the download count is way less than Forge, but SJCL is first in my list for a reason. SJCL provides minimal options of algorithms and cipher modes, etc. Just the best, to help ease the burden of having to research many algorithms and cipher modes to find out which are now broken.

What’s also really good to see is SJCL pushing consumers down the right path and issuing warnings around primitives that have issues. For example the warning against using CBC. I discuss this further in the risks that solution causes section.

Other than the countermeasure, This is probably the best offering we have for crypto in the browser. It has sensible defaults, good warnings, not to many options to understand in order to make good choices. This is one of those libraries that guides the developer down the right path.

Encrypts your plain text using the AES block cypher with a choice of cipher modes CCM or OCB2. AES is the industry-standard block-cipher for this purpose, one of the better choices for crypto in the browser if it must be done. AES comes in three forms. 128 bit (very efficient), 192 bit and 256 bit. The modes of operation that SJCL have provided for use with AES are the following two Authenticated Encryption with Associated Data (AEAD) ciphers:

- AES-CCM. Counter with CBC-MAC (CCM) is a mode of operation for cryptographic block ciphers of 128 bits in length. It is an authenticated encryption algorithm designed to provide both authentication and confidentiality

- AES-OCB2. Offset CodeBook (OCB) is also a mode of operation for cryptographic block ciphers of 128 bits in length. OCB2 is an authenticated encryption algorithm designed to provide both authentication and confidentiality. OCB1 didn’t allow associated data to be included with the message. Integrity verification is now part of the decryption step with OCB2. GCM is similar to OCB, but GCM and CCM don’t have any patents. Although exemptions have been granted so that OCB can be used in software licensed under the GNU General Public License

- Uses PBKDF2 for One way hashing of passwords. More details around password hashing in the Data-store Compromise section.

-

Forge

- Source Code: https://github.com/digitalbazaar/forge

- NPM package: https://www.npmjs.com/package/node-forge

- Forge is a JavaScript library that provides a native implementation of TLS and a large toolbox containing utilities and implementations (although many considered insecure now) for:

- Transports: TLS, HTTP, SSH, XHR, Sockets

- Ciphers: AES, 3DES, DES and RC2

- Cipher Modes: GCM, ECB, CBC, CFB, OFB, and CTR

- Asymmetric cryptography: RSA, RSA-KEM, X.509, PKCS#5, PKCS#7, PKCS#8, PKCS#10, PKCS#12 and ASN.1

- Message Digests: SHA1, SHA256, SHA384, SHA512, MD5 and HMAC

- Utilities: Prime number generator, pseudo-random number generator

-

OpenPGPjs

- Home page: https://openpgpjs.org/

- Source Code: https://github.com/openpgpjs/openpgpjs

-

SecurityDriven.Inferno

Although it’s not a client side library, it’s still worth mentioning, because it’s opinionated and from the looks of it, Stan Drapkin is making good decisions and focussing on the best of bread components.- Source Code: https://github.com/sdrapkin/SecurityDriven.Inferno

- Documentaion: http://securitydriven.net/inferno/

- NuGet package: https://www.nuget.org/packages/Inferno/

Consuming Free and Open Source

This is where A9 (Using Components with Known Vulnerabilities) of the 2017 OWASP Top 10 comes in.

We are consuming far more free and open source libraries than we have ever before. Much of the code we are pulling into our projects is never intentionally used, but is still adding surface area for attack. Much of it:

- Is not thoroughly tested for what it should do and what it should not do. We are often relying on developers we do not know a lot about to have not introduced defects. As I discussed in the “Code Review” section of the Process and Practises chapter of Fascicle 0, most developers are more focused on building than breaking, they do not even see the defects they are introducing.

- Is not reviewed evaluated. That is right, many of the packages we are consuming are created by solo developers with a single focus of creating and little to no focus of how their creations can be exploited. Even some teams with a security champion are not doing a lot better.

- Is created by amateurs that could and do include vulnerabilities. Anyone can write code and publish to an open source repository. Much of this code ends up in our package management repositories which we consume.

- Does not undergo the same requirement analysis, defining the scope, acceptance criteria, test conditions and sign off by a development team and product owner that our commercial software does.

There are some very sobering statistics, also detailed in “the morning paper” by Adrian Colyer, on how many defective libraries we are depending on. We are all trying to get things done faster, and that in many cases means consuming someone else’s work rather than writing our own code.

Many vulnerabilities can hide in these external dependencies. It is not just one attack vector any more, it provides the opportunity for many vulnerabilities to be sitting waiting to be exploited. If you do not find and deal with them, I can assure you, someone else will.

Running any type of scripts from non local sources without first downloading and inspecting them, and checking for known vulnerabilities, has the potential to cause massive damage, for example, destroy or modify your systems and any other that may be reachable, send sensitive information to an attacker, or many other types of other malicious activity.

Insufficient Attack Protection

There is a good example of what the Insecure Direct Object References risk looks like in the NodeGoat web application. Check out the tutorial, along with the video of how the attack is played out, along with the sample code and recommendations of how to fix.

Lack of Active Automated Prevention

The web application is being attacked with unusual requests.

Attackers probe for many types of weaknesses within the application, when they think they find a flaw, they attempt to learn from this and refine their attack technique.

Attackers have budgets just like application developers/defenders. As they get closer to depleting their budget without gaining a foothold, they become more impulsive, start making more noise, and mistakes creep into their techniques, which makes it even more obvious that their probes are of a malicious nature.

3. SSM Countermeasures

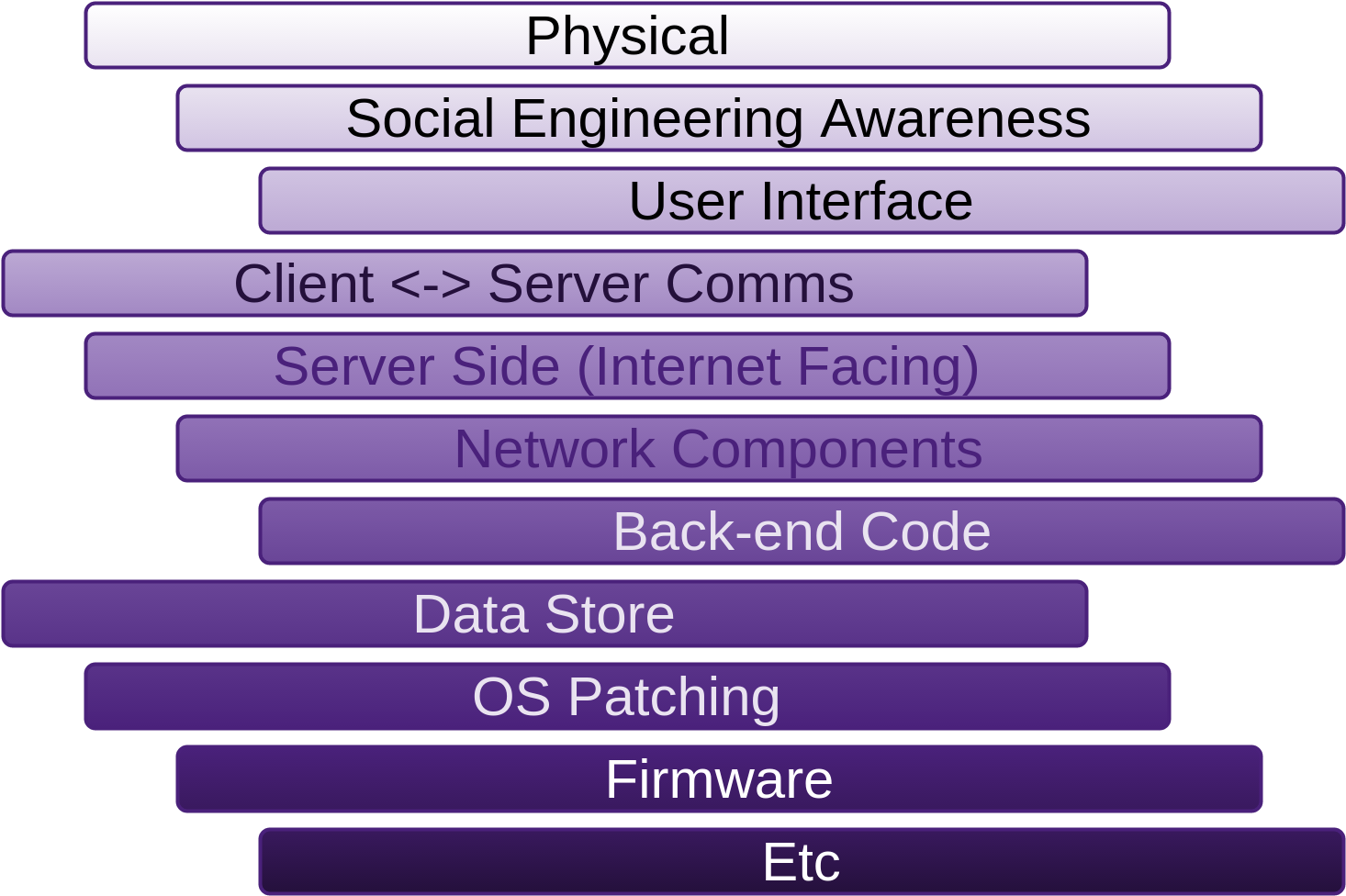

Every decision made in a project needs to factor in security. Just as with other non functional requirements, retrofitting means undoing what you’ve already done and rebuilding. Maintaining the mindset of going back later and bolting on security doesn’t work.

Often I’ll hear people say “well we haven’t been hacked so far”. This shows a lack of understanding. I usually respond with “That you are aware of”. Many of the most successful attacks go unnoticed. Even if you or your organisation haven’t been compromised, business’s are changing all the time, along with the attack surface and your assets. It’s more so a matter of when, than if.

One of the additional resources useful at this stage is the MS Application Threats and Countermeasures.

Lack of Visibility

Also refer to the “Lack of Visibility” section in the VPS chapter, where I discuss a number of tried and tested solutions. Much of what we discuss here will also make use of, and in some cases, such as the logging and monitoring depend on components being set-up from the VPS chapter’s Countermeasures sections within the Lack of Visibility sections.

As Bruce Schneier said: “Detection works where prevention fails and detection is of no use without response”. This leads us to application logging.

With good visibility we should be able to see anticipated and unanticipated exploitation of vulnerabilities as they occur and also be able to go back and review/audit the events. Of course you’re still going to need someone engaged enough (discussed in the People chapter of Fascicle 0) to be reviewing logs and alerts.

Insufficient Logging

When it comes to logging in NodeJS, you can’t really go past winston. It has a lot of functionality and what it does not have is either provided by extensions, or you can create your own. It is fully featured, reliable and easy to configure like NLog in the .NET world.

I also looked at express-winston, but could not see why it needed to exist.

{

...

"dependencies": {

...,

"config": "^1.15.0",

"express": "^4.13.3",

"morgan": "^1.6.1",

"//": "nodemailer not strictly necessary for this example,",

"//": "but used later under the node-config section.",

"nodemailer": "^1.4.0",

"//": "What we use for logging.",

"winston": "^1.0.1",

"winston-email": "0.0.10",

"winston-syslog-posix": "^0.1.5",

...

}

}

winston-email also depends on nodemailer.

Opening UDP port

with winston-syslog, it seems to be what a lot of people are using. I think it may be due to the fact that winston-syslog is the first package that works well for winston and syslog.

If going this route, you will need the following in your /etc/rsyslog.conf:

$ModLoad imudp

# Listen on all network addresses. This is the default.

$UDPServerAddress 0.0.0.0

# Listen on localhost.

$UDPServerAddress 127.0.0.1

$UDPServerRun 514

# Or the new style configuration.

Address <IP>

Port <port>

# Logging for your app.

local0.* /var/log/yourapp.log

I Also looked at winston-rsyslog2 and winston-syslogudp, but they did not measure up for me.

If you do not need to push syslog events to another machine, and I don’t mean pushing logs, then it does not make much sense to push through a local network interface when you can use your posix syscalls as they are faster and safer. Line 7 below shows the open port.

1 root@kali:~# nmap -p514 -sU -sV <target IP> --reason

2

3 Starting Nmap 6.47 ( http://nmap.org )

4 Nmap scan report for kali (<target IP>)

5 Host is up, received arp-response (0.0015s latency).

6 PORT STATE SERVICE REASON VERSION

7 514/udp open|filtered syslog no-response

8 MAC Address: 34:25:C9:96:AC:E0 (My Computer)

Using Posix

The winston-syslog-posix package was inspired by blargh. winston-syslog-posix uses node-posix.

If going this route, you will need the following in your /etc/rsyslog.conf instead of the above, you will still be able to push logs off-site, as discussed in the VPS chapter under the Logging and Alerting section in Countermeasures:

# Logging for your app.

local0.* /var/log/yourapp.log

Now you can see on line 7 below that the syslog port is no longer open:

1 root@kali:~# nmap -p514 -sU -sV <target IP> --reason

2

3 Starting Nmap 6.47 ( http://nmap.org )

4 Nmap scan report for kali (<target IP>)

5 Host is up, received arp-response (0.0014s latency).

6 PORT STATE SERVICE REASON VERSION

7 514/udp closed syslog port-unreach

8 MAC Address: 34:25:C9:96:AC:E0 (My Computer)

Logging configuration should not be in the application startup file. It should be in the configuration files. This is discussed further under the “Store Configuration in Configuration files” section.

Notice the syslog transport in the configuration below starting on line 39.

1 module.exports = {

2 logger: {

3 colours: {

4 debug: 'white',

5 info: 'green',

6 notice: 'blue',

7 warning: 'yellow',

8 error: 'yellow',

9 crit: 'red',

10 alert: 'red',

11 emerg: 'red'

12 },

13 // Syslog compatible protocol severities.

14 levels: {

15 debug: 0,

16 info: 1,

17 notice: 2,

18 warning: 3,

19 error: 4,

20 crit: 5,

21 alert: 6,

22 emerg: 7

23 },

24 consoleTransportOptions: {

25 level: 'debug',

26 handleExceptions: true,

27 json: false,

28 colorize: true

29 },

30 fileTransportOptions: {

31 level: 'debug',

32 filename: './yourapp.log',

33 handleExceptions: true,

34 json: true,

35 maxsize: 5242880, //5MB

36 maxFiles: 5,

37 colorize: false

38 },

39 syslogPosixTransportOptions: {

40 handleExceptions: true,

41 level: 'debug',

42 identity: 'yourapp_winston'

43 //facility: 'local0' // default

44 // /etc/rsyslog.conf also needs: local0.* /var/log/yourapp.log

45 // If non posix syslog is used, then /etc/rsyslog.conf or one

46 // of the files in /etc/rsyslog.d/ also needs the following

47 // two settings:

48 // $ModLoad imudp // Load the udp module.

49 // $UDPServerRun 514 // Open the standard syslog port.

50 // $UDPServerAddress 127.0.0.1 // Interface to bind to.

51 },

52 emailTransportOptions: {

53 handleExceptions: true,

54 level: 'crit',

55 from: '[email protected]',

56 to: '[email protected]',

57 service: 'FastMail',

58 auth: {

59 user: "yourusername_alerts",

60 pass: null // App specific password.

61 },

62 tags: ['yourapp']

63 }

64 }

65 }

In development I have chosen here to not use syslog. You can see this on line 3 below. If you want to test syslog in development, you can either remove the logger object override from the devbox1-development.js file or modify it to be similar to the above. Then add one line to the /etc/rsyslog.conf file to turn on. As mentioned in a comment above in the default.js config file on line 44.

1 module.exports = {

2 logger: {

3 syslogPosixTransportOptions: null

4 }

5 }

In production we log to syslog and because of that we do not need the file transport you can see configured starting on line 30 above in the default.js configuration file, so we set it to null as seen on line 6 below in the prodbox-production.js file.

I have gone into more depth about how we handle syslogs in the VPS chapter under the Logging and Alerting section, where all of our logs including these ones get streamed to an off-site syslog server. Thus providing easy aggregation of all system logs into one user interface that DevOpps can watch on their monitoring panels in real-time and also easily go back in time to visit past events. This provides excellent visibility as one layer of defence.

There were also some other options for those using Papertrail as their off-site syslog and aggregation PaaS, but the solutions were not as clean as simply logging to local syslog from your applications and then sending off-site from there. Again this is discussed in more depth in the “Logging and Alerting” section in the VPS chapter.

1 module.exports = {

2 logger: {

3 consoleTransportOptions: {

4 level: {},

5 },

6 fileTransportOptions: null,

7 syslogPosixTransportOptions: {

8 handleExceptions: true,

9 level: 'info',

10 identity: 'yourapp_winston'

11 }

12 }

13 }

// Build creates this file.

module.exports = {

logger: {

emailTransportOptions: {

auth: {

pass: 'Z-o?(7GnCQsnrx/!-G=LP]-ib' // App specific password.

}

}

}

}

The logger.js file wraps and hides extra features and transports applied to the logging package we are consuming.

var winston = require('winston');

var loggerConfig = require('config').logger;

require('winston-syslog-posix').SyslogPosix;

require('winston-email').Email;

winston.emitErrs = true;

var logger = new winston.Logger({

// Alternatively: set to winston.config.syslog.levels

exitOnError: false,

// Alternatively use winston.addColors(customColours); There are many ways

// to do the same thing with winston

colors: loggerConfig.colours,

levels: loggerConfig.levels

});

// Add transports. There are plenty of options provided and you can add your own.

logger.addConsole = function(config) {

logger.add (winston.transports.Console, config);

return this;

};

logger.addFile = function(config) {

logger.add (winston.transports.File, config);

return this;

};

logger.addPosixSyslog = function(config) {

logger.add (winston.transports.SyslogPosix, config);

return this;

};

logger.addEmail = function(config) {

logger.add (winston.transports.Email, config);

return this;

};

logger.emailLoggerFailure = function (err /*level, msg, meta*/) {

// If called with an error, then only the err param is supplied.

// If not called with an error, level, msg and meta are supplied.

if (err) logger.alert(

JSON.stringify(

'error-code:' + err.code + '. '

+ 'error-message:' + err.message + '. '

+ 'error-response:' + err.response + '. logger-level:'

+ err.transport.level + '. transport:' + err.transport.name

)

);

};

logger.init = function () {

if (loggerConfig.fileTransportOptions)

logger.addFile( loggerConfig.fileTransportOptions );

if (loggerConfig.consoleTransportOptions)

logger.addConsole( loggerConfig.consoleTransportOptions );

if (loggerConfig.syslogPosixTransportOptions)

logger.addPosixSyslog( loggerConfig.syslogPosixTransportOptions );

if (loggerConfig.emailTransportOptions)

logger.addEmail( loggerConfig.emailTransportOptions );

};

module.exports = logger;

module.exports.stream = {

write: function (message, encoding) {

logger.info(message);

}

};

When the app first starts it initialises the logger on line 15 below.

1 var http = require('http');

2 var express = require('express');

3 var path = require('path');

4 var morganLogger = require('morgan');

5 // Due to bug in node-config the next line is needed before config

6 // is required: https://github.com/lorenwest/node-config/issues/202

7 if (process.env.NODE_ENV === 'production')

8 process.env.NODE_CONFIG_DIR = path.join(__dirname, 'config');

9 // Yes the following are hoisted, but it's OK in this situation.

10 var logger = require('./util/logger'); // Or use requireFrom module so no relative paths.

11 //...

12 var errorHandler = require('errorhandler');

13 var app = express();

14 //...

15 logger.init();

16 app.set('port', process.env.PORT || 3000);

17 app.set('views', __dirname + '/views');

18 app.set('view engine', 'jade');

19 //...

20 // In order to utilise connect/express logger module in our third party logger,

21 // Pipe the messages through.

22 app.use(morganLogger('combined', {stream: logger.stream}));

23 app.use(methodOverride());

24 app.use(bodyParser.json());

25 app.use(bodyParser.urlencoded({ extended: true }));

26 app.use(express.static(path.join(__dirname, 'public')));

27 //...

28 require('./routes')(app);

29

30 if ('development' == app.get('env')) {

31 app.use(errorHandler({ dumpExceptions: true, showStack: true }));

32 //...

33 }

34 if ('production' == app.get('env')) {

35 app.use(errorHandler());

36 //...

37 }

38

39 http.createServer(app).listen(app.get('port'), function(){

40 logger.info(

41 "Express server listening on port " + app.get('port') + ' in '

42 + process.env.NODE_ENV + ' mode'

43 );

44 });

- You can also optionally log JSON metadata

- You can provide an optional callback to do any work required, which will be called once all transports have logged the specified message.

Here are some examples of how you can use the logger. The logger.log(level can be replaced with logger.<level>( where level is any of the levels defined in the default.js configuration file above:

// With string interpolation also.

logger.log('info', 'test message %s', 'my string');

logger.log('info', 'test message %d', 123);

logger.log('info', 'test message %j', {aPropertyName: 'Some message details'}, {});

logger.log(

'info', 'test message %s, %s', 'first', 'second',

{aPropertyName: 'Some message details'}

);

logger.log(

'info', 'test message', 'first', 'second', {aPropertyName: 'Some message details'}

);

logger.log(

'info', 'test message %s, %s', 'first', 'second',

{aPropertyName: 'Some message details'}, logger.emailLoggerFailure

);

logger.log(

'info', 'test message', 'first', 'second',

{aPropertyName: 'Some message details'}, logger.emailLoggerFailure

);

As an architectural concern, also consider hiding cross cutting concerns like logging using Aspect Oriented Programming (AOP).

Insufficient Monitoring

There are a couple of ways of approaching monitoring. You may want to see and be notified of the health of your application only when it is not fine (sometimes called the dark cockpit approach), or whether it is fine or not. Personally I like to have both

Dark Cockpit

As discussed in the VPS chapter, Monit is an excellent tool for the dark cockpit approach. It’s easy to configure. Monit Has excellent easy to read, short documentation which is easy to understand, the configuration file has lots of examples commented out ready for you to take as is and modify to suite your environment. Remember I provided examples of monitoring a VPS and NodeJS web application in the VPS chapter. I’ve personally had excellent success with Monit. Check the VPS chapter Monitoring section for a refresher. Monit doesn’t just give you monitoring, it can also perform pre-defined actions based on current states of many VPS resources and their applications.

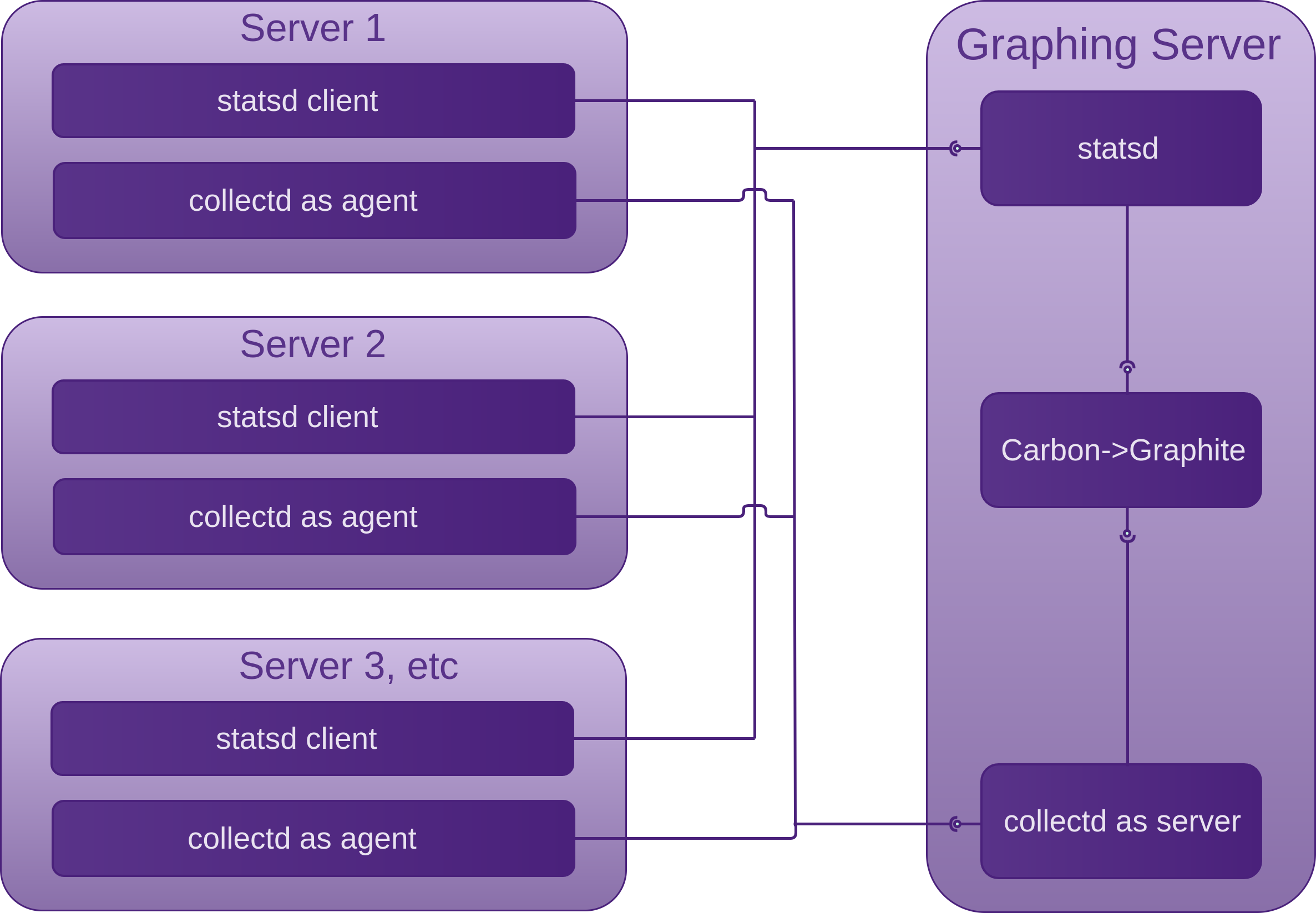

Statistics Graphing

Continuing on with the Statistics Graphing section in the VPS chapter, we look at adding statsd as application instrumentation to our existing collectd -> graphite set-up.

Just as collectd can collect and send data to graphite directly if collectd agent and server are on the same machine, or indirectly via a collectd server on another machine to provide continual system visibility, statsd can play a similar role as collectd agent/server but for our applications.

Statsd is a lightweight NodeJS daemon that collects and stores the statistics sent to it for a configurable amount of time (10 seconds by default) by listening for UDP packets containing them. Statsd then aggregates the statistics and flushes a single value for each statistic to its backends (graphite in our case) using a TCP connection. The flushInterval needs to be the same as the retentions interval in the Carbon /etc/carbon/storage-schemas.conf file. This is how statsd gets around the Carbon limitation of only accepting a single value per interval. The protocol that statsd expects to receive looks like the following, expecting a type in the third position instead of the timestamp that Carbon expects:

Where <type> is one of the following:

This tells statsd to add up all of these values that it receives for a particular statistic during the flush interval and send the total on flush. A sample rate can also be provided from the statsd client as a decimal of the number of samples per event count:

<metric-name>:<actual-value>|c[|@<sample-rate>]

So if the statistic is only being sampled 1/10th of the time:

<metric-name>:<actual-value>|c|@0.1

This value needs to be the timespan in milliseconds between a start and end time. This could be for example, the timespan that it took to hash a piece of data to be stored such as a password, or how long it took to pre-render an isomorphic web view. Just as with the count type, you can also provide a sample rate for timing as well. Statsd does quite a lot of work with timing data, it works out percentiles, mean, standard deviation, sum, lower and upper bounds for the flush interval. This can be very useful for when you are making changes to your application and want to know if those changes are slowing it down.

A gauge is a snap-shot of a reading in your application code, like your cars fuel gauge for example. As opposed to the count type which is calculated by statsd, a gauge is calculated at the statsd client.

Sets allow you to send the number of unique occurrences of events between flushes, so for example you could send the source address of every request to your web application and statsd would workout the number of unique source requests per flush interval.

So for example if you have statsd running on a server called graphing-server with the default port, you can test sending a count metric with the following command:

The server and port are specified in the config file that you create for yourself. You can create this from the exampleConfig.js as a starting point. In exampleConfig.js you will see the server and port properties. The current options for server are tcp or udp, with udp being the default. The server file must exist in the ./servers/ directory.

One of the ways we can generate statistics to send to our listening statsd daemon is by using one of the many language specific statsd clients, which make it trivially easy to collect and send application statistics via a single routine call.

Lack of Input Validation, Filtering and Sanitisation

Generic

What ever you can do to help establish clean lines of separation of concerns in terms of both domain and technology, and keep as much as possible as simple as possible, the harder it will be for defects and malicious code to hide.

Your staple practises when it comes to defending against potentially dangerous input are validation and filtering. There are cases though when the business requires that input must be accepted that is dangerous yet still valid. This is where you will need to implement sanitisation. There is a lot more research and thought involved when you need to perform sanitisation, so the first cause of action should be to confirm that the specific dangerous yet valid input is in-fact essential.

Recommendations:

Research:

- Libraries

- The execution contexts that your data will flow through and / or be placed in

- Which character signatures need to be sanitised

Attempt to use well tested, battle hardened language specific libraries that know how to validate, filter and sanitise.

Create enough “Evil Test Conditions” as discussed in the Process and Practises chapter of Fascicle 0 to verify that:

-

Validation

- Only white listed characters can be received (both client and server side)

- Maximum and minimum field lengths are enforced

- Constrain fields to well structured data, like: credit card numbers, dates, social security numbers, area codes, e-mail addresses, etc. The more you can define and constrain the types of data that is permitted in any given field, the easier it is to apply a white list and validate effectively.

WebComponents have come a long way and I think thy are perfect for this task.

With WebComponents, you get to create your own custom elements, an HTML tag. The browser will understand these natively, so they will work with any framework or library you are using. In order to define your own custom element, the tag name you define must be all lower case and must contain at least one hyphen used for separating name-spaces. No elements will be added to HTML, SVG or MathML that contain hyphens.

Each Custom Element (

my-passwordfor example) has a corresponding HTML Import (my-password.htmlfor example) that provides the definition of the Custom Element, behaviour (JavaScript), DOM structure and styling. Nothing in the Custom Elements HTML Import can leak out, it is a component, encapsulated. Styles can also not leak in. So as our applications continue to grow, Custom Elements are a great tool for modularising concerns.Custom Elements are currently only natively supported in Chrome and Opera, but we have the webcomponents.js set of polyfills which means we can all use WebComponents.

Polymer is a library that helps you write WebComponents and mediates with the browser on your behalf, it also polyfills.

Custom Element authors can also expose Custom CSS properties that they think consumers may want to apply values to, these styles are prefixed with

--and are essentially an interface to a backing (CSS property) field, which would otherwise be inaccessible.The Custom Element author can also decide to define a set of CSS properties as a single Custom CSS property, called a Custom CSS mixin, and then allow all of the properties within the set to be applied to a specific CSS rule in an elements local DOM. This is done using the CSS @apply rule. This allows consumers to mix in any styles within the single Custom CSS property, but only intentionally by using the

--prefix.Polymer also has a large collection of Custom Elements already created for you out of the box. Some of these Custom Elements are perfect for constraining and providing validation and filtering of input types, credit card details for example.

- You have read, understood and implemented Validation as per Identify Risks section

-

Filtering

- Your filtering routines are doing as expected with valid and non valid input (both client and server side)

- You have read, understood and implemented Filtering as per Identify Risks section

-

Sanitisation

- All valid characters that need some modification are modified. Modifying could simply be a case of for example rounding a float down (removing precision), or changing the case of an alpha character. Not usually a security issue.